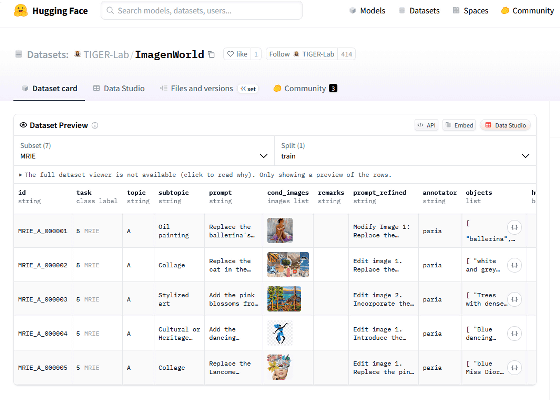

ImagenWorld is a benchmark that measures the performance of image generation AI, evaluating the accuracy of both image generation and image editing and identifying each model's weak areas.

The performance of image generation AI is often judged on criteria that are difficult to quantify, such as 'Can it generate more beautiful images than other AIs?' 'ImagenWorld' is a benchmark developed by a research team at the University of Waterloo and Comfy.org that quantitatively evaluates the performance of various AIs by assigning them tasks such as image generation and image editing.

ImagenWorld: Stress-Testing Image Generation Models with Explainable Human Evaluation on Open-ended Real-World Tasks

Introducing ImagenWorld: A Real World Benchmark for Image Generation and Editing

https://blog.comfy.org/p/introducing-imagenworld

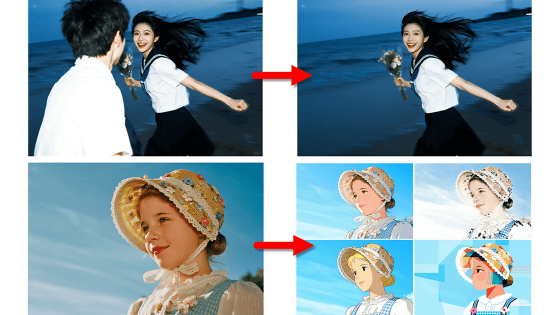

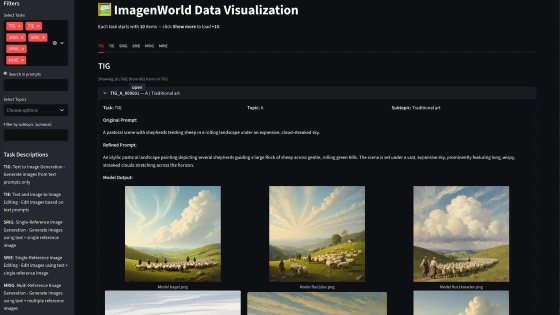

ImagenWorld tests six types of tasks: 'Text-based image generation (TIG),' 'Text-based image editing (TIE),' 'Single image and text-based image generation (SRIG),' 'Single image and text-based image editing (SRIE),' 'Multiple image and text-based image generation (MRIG),' and 'Multiple image and text-based image editing (MRIE).' The output of each AI is evaluated against criteria such as 'Does the output follow the instructions in the prompt?', 'Is it visually consistent?', 'Do all elements make logical sense?', and 'Is any text in the generated image illegible?'

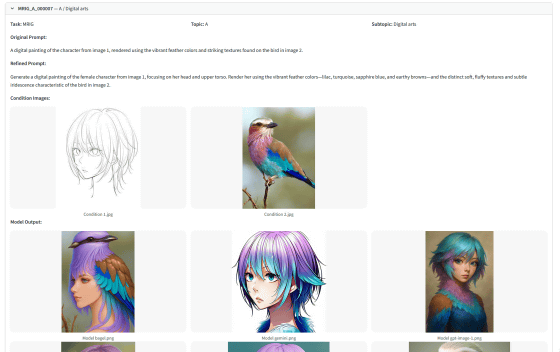
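The six task types and four evaluation criteria described above can be pictured as a small data structure. The following is a minimal sketch only, not ImagenWorld's actual evaluation code: the criterion labels, the `Rating` class, the `average_by_task` helper, and the 0-to-1 score scale are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean

# Task abbreviations and names as listed in the article.
TASKS = {
    "TIG": "Text-based image generation",
    "TIE": "Text-based image editing",
    "SRIG": "Single image and text-based image generation",
    "SRIE": "Single image and text-based image editing",
    "MRIG": "Multiple image and text-based image generation",
    "MRIE": "Multiple image and text-based image editing",
}

# Hypothetical short labels for the four criteria (higher score = better);
# these are NOT ImagenWorld's official names.
CRITERIA = (
    "follows_prompt",
    "visually_consistent",
    "logically_coherent",
    "text_legible",
)


@dataclass
class Rating:
    """One evaluator's scores for one generated image (assumed 0-to-1 scale)."""
    model: str
    task: str
    scores: dict  # criterion label -> score


def average_by_task(ratings, model):
    """Return {task: {criterion: mean score}} for a single model."""
    grouped = {}
    for r in ratings:
        if r.model != model:
            continue
        if r.task not in TASKS:
            raise ValueError(f"unknown task: {r.task}")
        grouped.setdefault(r.task, []).append(r.scores)
    return {
        task: {c: mean(row[c] for row in rows) for c in CRITERIA}
        for task, rows in grouped.items()
    }
```

Grouping scores by task and by criterion in this way is what makes it possible to point to a specific weak area, for example a model that scores well on TIG but poorly on MRIE.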

The ImagenWorld test contents and results are summarized on a page called 'ImagenWorld Visualizer.' For example, the following test instructs the AI to 'color Image 1, a depiction of a woman, using the color and texture of the bird in Image 2.' Looking at the results, we can see that Gemini 2.0 Flash (bottom center) produced output that follows the instructions, while BAGEL (bottom left) and GPT-Image-1 (bottom right) produced images that are far from what was asked.

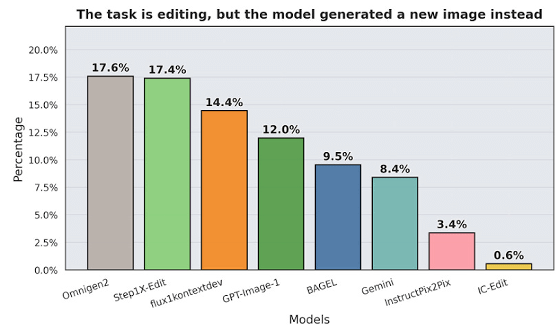

The research team has already run the ImagenWorld tests on several image generation AIs and published its findings. The graph below shows how often each AI ignored the original image and generated an entirely new one when given an image editing task. Even cutting-edge models like Gemini 2.0 Flash did this 8.4% of the time.

In addition, many AIs struggled with the task of 'adding elements from "Image B" and "Image C" to the positions indicated in "Image A."'

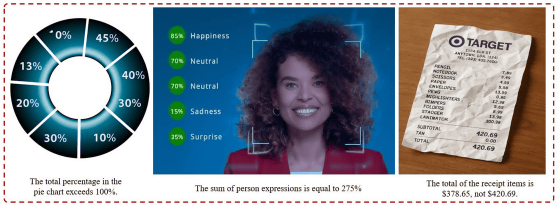

When AIs generate percentage charts, the values often add up to more than 100%, and when they generate receipts, the listed total price is sometimes wrong.

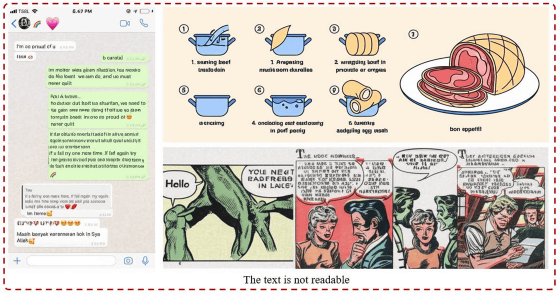
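The chart and receipt errors described above are simple arithmetic inconsistencies, so they are easy to check once the numbers have been read off an image. The sketch below assumes the values have already been transcribed by hand or by some other tool. The function names and the tolerance are hypothetical, and this is not how ImagenWorld itself scores images.

```python
from decimal import Decimal


def percentages_sum_to_100(shares, tolerance=Decimal("0.5")):
    """Check that the shares of a percentage chart add up to about 100.

    `tolerance` is an assumed allowance for rounding in the chart labels.
    """
    total = sum((Decimal(str(s)) for s in shares), Decimal("0"))
    return abs(total - Decimal("100")) <= tolerance


def receipt_total_matches(items, stated_total):
    """Check that a receipt's stated total equals the sum of its line items.

    `items` is a list of (unit_price, quantity) pairs; prices are passed as
    strings or numbers and converted to Decimal to avoid float rounding.
    """
    computed = sum((Decimal(str(price)) * qty for price, qty in items), Decimal("0"))
    return computed == Decimal(str(stated_total))


# Example with made-up values: a chart whose slices total 105%.
print(percentages_sum_to_100([40, 35, 30]))  # False
# Example with made-up values: two items at 1.20 plus one at 3.50.
print(receipt_total_matches([("1.20", 2), ("3.50", 1)], "5.90"))  # True
```

The checks use `Decimal` rather than floating point so that prices such as 1.20 compare exactly.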

Generating text and explanatory illustrations is also a difficult task for most AIs.

The research team positions ImagenWorld as a 'framework for evaluating next-generation image generation AI models,' and says that using ImagenWorld's evaluations will make it possible to build robust and reliable generation systems.

The instructions and reference images included in ImagenWorld are available at the following link:

TIGER-Lab/ImagenWorld · Datasets at Hugging Face

https://huggingface.co/datasets/TIGER-Lab/ImagenWorld


in AI, Posted by log1o_hf