DDN (Discrete Distribution Networks) is a new generative AI model with simple principles and unique properties.

DDN: Discrete Distribution Networks

https://discrete-distribution-networks.github.io/

[2401.00036] Discrete Distribution Networks

https://arxiv.org/abs/2401.00036

GitHub - DIYer22/discrete_distribution_networks: DDN: A novel generative model with simple principles and unique properties. (ICLR 2025)

https://github.com/DIYer22/discrete_distribution_networks

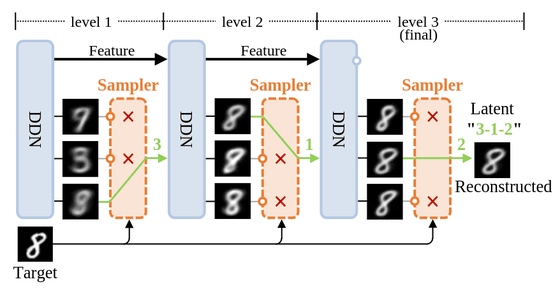

DDN is a new generative AI model that approximates data distributions using hierarchical discrete distributions. Its distinguishing feature is that the network generates multiple samples simultaneously, rather than a single output, in order to capture distributional information.

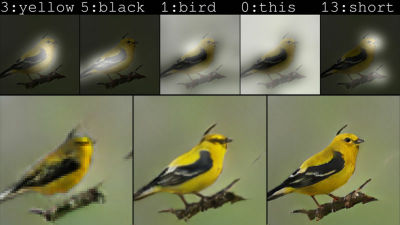

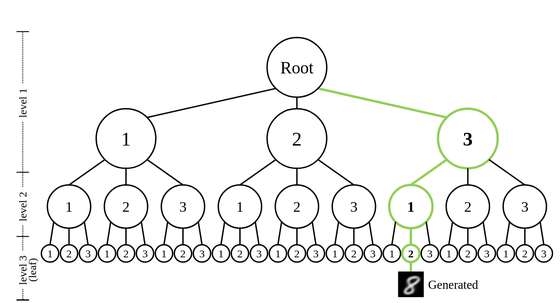

To capture finer details of the target data, DDN selects the output closest to the ground truth (GT) from the rough results generated in the first layer. The selected output from the first layer is then fed back into the network as a condition for the second layer, generating new outputs that are more similar to the GT. In this manner, through multiple layers, the representation space of DDN's outputs expands exponentially, and the generated samples become increasingly similar to the GT. This hierarchical output pattern of discrete distributions gives DDN its unique properties: more general zero-shot conditional generation and one-dimensional latent representations.
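The layer-by-layer selection described above can be illustrated with a toy sketch. This is not the authors' implementation: `toy_layer`, `encode`, and `decode` are hypothetical names, the "network" is replaced by a fixed rule that proposes K scalar candidates around the current condition, and the data is a single number in [0, 1] rather than an image. It only shows how picking the closest candidate at each layer yields a sequence of indices (the latent) and how K outputs per layer over L layers give K^L representable outputs.

```python
def toy_layer(condition, layer_index, k):
    """Hypothetical stand-in for one DDN layer.

    Returns k candidates spread around `condition`; the spread shrinks
    by a factor of k at every layer, so later layers refine finer detail.
    """
    width = 1.0 / (k ** layer_index)
    return [condition + (i - (k - 1) / 2) * width / k for i in range(k)]


def encode(target, num_layers=3, k=4):
    """Pick the candidate closest to the target at each layer."""
    condition = 0.5  # assumed starting point: the middle of [0, 1]
    latent = []
    for layer_index in range(num_layers):
        candidates = toy_layer(condition, layer_index, k)
        best = min(range(k), key=lambda i: abs(candidates[i] - target))
        latent.append(best)           # the chosen index is part of the latent
        condition = candidates[best]  # the chosen output conditions the next layer
    return latent, condition


def decode(latent, k=4):
    """Replay the chosen indices to regenerate the same output."""
    condition = 0.5
    for layer_index, index in enumerate(latent):
        condition = toy_layer(condition, layer_index, k)[index]
    return condition


latent, reconstruction = encode(0.3)
num_representable = 4 ** 3  # K^L distinct outputs for K=4, L=3
```

In this toy setting the reconstruction error is at most 1 / (2 · K^L), which is one way to see why adding layers brings the output closer to the target while the latent stays a short list of integers.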

Below is a diagram showing DDN's multi-layer image generation process.

This mechanism of generating a large number of output candidates (split) and retaining only the most promising ones (prune) is called 'Split-and-Prune' and is considered an excellent mechanism for search and optimization.

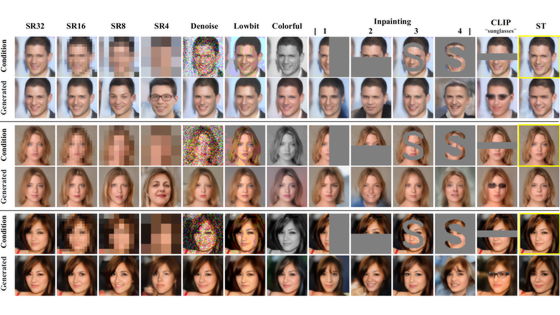

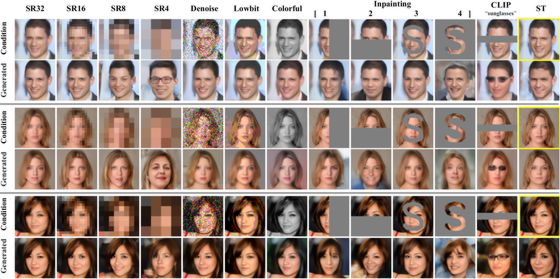

DDN supports zero-shot conditional generation in non-pixel domains and, notably, does so without relying on gradients, for example text-to-image generation guided by a black-box model. The images in the yellow borders serve as ground truth, and the abbreviations in the table headers correspond to each task. 'SR' stands for Super-Resolution, and the number that follows indicates the resolution. 'ST' stands for Style Transfer, which uses the condition to compute a perceptual loss.
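To make the gradient-free aspect concrete, here is a minimal sketch under loud assumptions: `toy_layer` and `guided_sample` are hypothetical names, the generator is a fixed toy rule over scalars instead of a trained network, and `black_box_score` is an invented, non-differentiable stand-in for an external model. The point is only that candidates can be chosen by querying a scoring function, with no gradient of that function required.

```python
import math


def toy_layer(condition, layer_index, k):
    """Hypothetical stand-in for one generator layer: k candidates near `condition`."""
    width = 1.0 / (k ** layer_index)
    return [condition + (i - (k - 1) / 2) * width / k for i in range(k)]


def guided_sample(score, num_layers=3, k=4):
    """At each layer, keep the candidate that the black-box score rates highest."""
    condition = 0.5  # assumed starting point
    for layer_index in range(num_layers):
        candidates = toy_layer(condition, layer_index, k)
        condition = max(candidates, key=score)  # only score values are used
    return condition


def black_box_score(x):
    """Invented condition: prefer values whose first decimal digit is 7.

    This function is piecewise constant, so its gradient is zero almost
    everywhere and gives no useful direction to gradient-based guidance.
    """
    return -abs(math.floor(x * 10) - 7)


result = guided_sample(black_box_score)
```

Greedy selection like this is not guaranteed to find the best output for every scoring function; it is shown here only to illustrate how a condition can steer generation through candidate selection alone.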

The following diagram shows the tree-structured representation space of DDN latent variables. Each sample can be mapped to

The effectiveness and properties of DDN have been demonstrated through experiments on CIFAR-10 and FFHQ, datasets widely used in machine learning and computer vision.

Yang said the total time spent on DDN development was 'less than three months,' and added that 'the experiments were preliminary, and there was limited time for detailed analysis and tuning.' As a result, DDN 'still has significant room for improvement.'
