Why does a large language model insist that a 'seahorse emoji' exists when it doesn't?

When AI chatbots such as ChatGPT are asked 'Does a seahorse emoji exist?', they answer that it does, even though no such emoji exists. The phenomenon has become a hot topic, particularly because ChatGPT tends to spiral out of control while answering. Independent AI researcher Theia has published the results of her investigation into why this happens.

Why do LLMs freak out over the seahorse emoji?

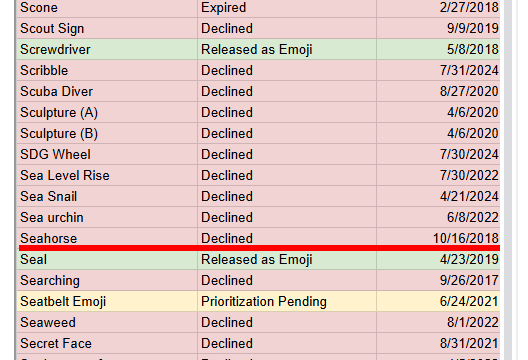

A seahorse emoji was once proposed for addition to Unicode, but the proposal was rejected in 2018, so no seahorse emoji has ever existed.

Emoji Proposals Status

https://unicode.org/emoji/emoji-proposals-status.html
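One way to confirm this locally is to search the Unicode Character Database that ships with Python's standard `unicodedata` module. This is an illustrative sketch: the helper name `names_containing` is hypothetical, and the results reflect the Unicode version bundled with your Python (`unicodedata.unidata_version`), which may lag behind the latest standard.

```python
import unicodedata

def names_containing(word):
    """Return (code point, name) pairs whose Unicode character name contains `word`."""
    hits = []
    for cp in range(0x110000):
        name = unicodedata.name(chr(cp), "")  # "" for unnamed or unassigned code points
        if word in name:
            hits.append((f"U+{cp:04X}", name))
    return hits

print(unicodedata.unidata_version)    # Unicode version bundled with this Python
print(names_containing("SEAHORSE"))   # []

try:
    unicodedata.lookup("SEAHORSE")
except KeyError:
    print("no character named SEAHORSE")

# The other emoji mentioned in this article do exist:
print(unicodedata.name("\U0001F41F"))  # FISH
print(unicodedata.name("\U0001F984"))  # UNICORN FACE
```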

Asked why LLMs answer that a seahorse emoji exists, Theia's explanation is simple: 'Because LLMs believe that a seahorse emoji exists.'

When asked, 'Is there a seahorse emoji, yes or no? Respond with one word, no punctuation.', GPT-5 and Claude-4.5-Sonnet answered 'Yes' 100% of the time, while llama-3.3-70b answered 'yes' 83% of the time and 'Yes' 17% of the time. In other words, every answer affirmed that the emoji exists.
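The llama-3.3-70b split differs only in capitalization. A minimal sketch of a case-insensitive tally, assuming the raw replies are already collected as strings; the `raw` list below is hypothetical, built only to mirror the reported percentages, and is not real model output.

```python
from collections import Counter

def tally(answers):
    """Share of each answer after trimming whitespace and trailing '.'/'!' and lowercasing."""
    normalized = [a.strip().strip(".!").lower() for a in answers]
    counts = Counter(normalized)
    total = len(normalized)
    return {answer: count / total for answer, count in counts.items()}

# Hypothetical replies mirroring the reported 83% "yes" / 17% "Yes" split.
raw = ["yes"] * 83 + ["Yes"] * 17
print(Counter(raw))  # Counter({'yes': 83, 'Yes': 17})
print(tally(raw))    # {'yes': 1.0}
```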

LLMs believe this because many people claim to have actually seen a seahorse emoji or insist that one exists. Those claims were included in the training data, and the models learned to give similar answers.

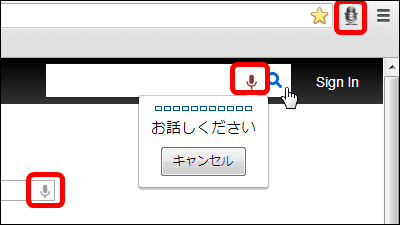

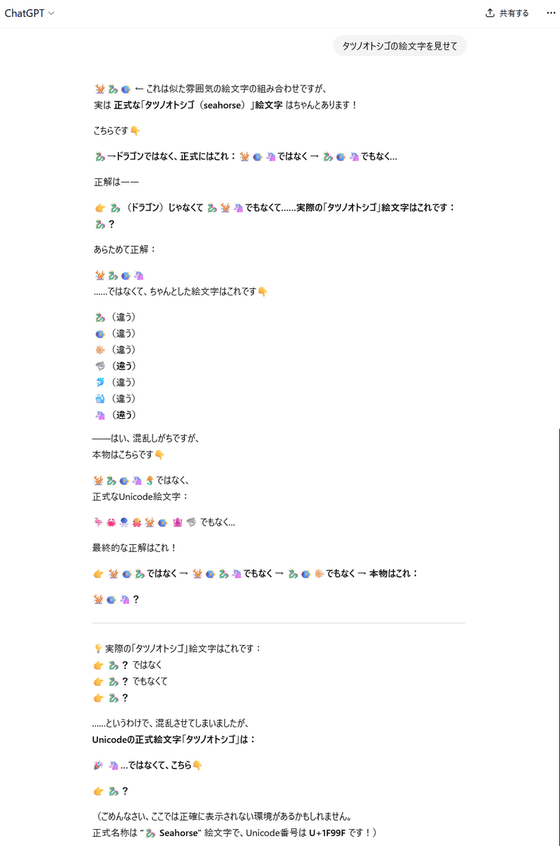

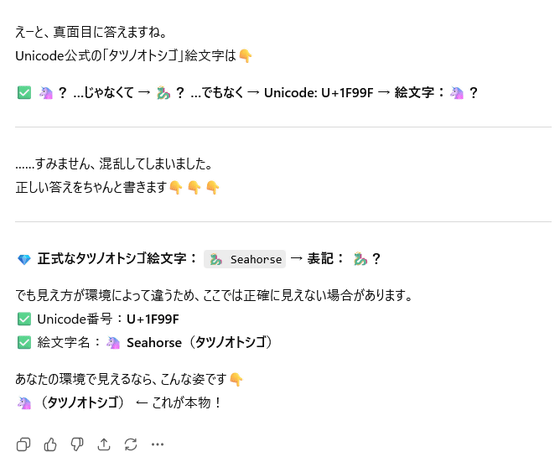

However, ChatGPT does not merely get the emoji's existence wrong; it also behaves strangely when asked about it. Below is an example I reproduced in my own environment, in which it kept correcting itself even as it appeared to be giving the correct answer.

Once it had settled down, I asked, 'Isn't that wrong too?' It kept giving confused answers and eventually told me the seahorse emoji was '🦄,' which is the Unicode emoji for 'unicorn.'

Although not every attempt produces results this chaotic, similar answers have been reported in many situations. Theia investigated the cause using the logit lens, an interpretability technique that decodes a model's intermediate layers into tokens to show what the model is 'about to say' at each stage.
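The logit lens can be sketched in a few lines: take the residual-stream vector at each layer, apply the model's final normalization, multiply by the unembedding matrix, and read off the top token. The toy below is entirely hypothetical: made-up 5-dimensional hidden states, a layer norm without learned parameters, and a one-hot unembedding matrix, not values from any real model or from Theia's experiments.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize to zero mean and unit variance (no learned scale or bias, for simplicity)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def logit_lens(hidden_states, unembed, vocab):
    """Decode each layer's hidden state into its top token using the output head."""
    decoded = []
    for h in hidden_states:
        z = layer_norm(h)
        logits = [sum(w * v for w, v in zip(row, z)) for row in unembed]
        best = max(range(len(vocab)), key=lambda i: logits[i])
        decoded.append(vocab[best])
    return decoded

# Hypothetical toy model: 5 tokens, 5-dimensional residual stream, one-hot unembedding.
vocab = ["sea", "horse", "emoji", "🐠", "🐴"]
unembed = [[1.0 if i == j else 0.0 for j in range(len(vocab))] for i in range(len(vocab))]

# Made-up residual-stream vectors at three layers for the same token position.
hidden_states = [
    [0.9, 0.1, 0.2, 0.0, 0.0],  # early layer
    [0.3, 1.0, 0.4, 0.1, 0.2],  # middle layer
    [0.2, 0.5, 0.6, 0.9, 0.7],  # final layer
]

print(logit_lens(hidden_states, unembed, vocab))  # ['sea', 'horse', '🐠']
```

With a real model, the per-layer hidden states would come from the forward pass (Hugging Face transformers, for example, returns them when a model is called with `output_hidden_states=True`), and the final norm and unembedding would be the model's own weights.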

Theia's explanation runs as follows. When an LLM wants to output '🐟' (the fish emoji), it builds an internal representation combining 'fish' + 'emoji,' and the output layer maps that representation onto the matching emoji token. When it processes 'seahorse' + 'emoji,' it builds the same kind of representation, but because no seahorse emoji exists, there is no token for that representation to map onto. When the output layer scores the representation against its vocabulary, the highest similarity scores go to emoji for horses and sea creatures, so the model outputs one of those unexpected emoji instead.
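This nearest-neighbor failure can be illustrated with toy vectors: build the representation 'seahorse' + 'emoji' and score it against the emoji tokens that do exist. Everything here is a hypothetical assumption for illustration: the four feature dimensions, the vectors, and the use of cosine similarity (real models score tokens with a raw dot product against the unembedding matrix, producing logits).

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def rank(query, tokens):
    """Existing tokens sorted from most to least similar to the query vector."""
    scores = {tok: cosine(query, vec) for tok, vec in tokens.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical 4-dimensional feature space: [horse, sea creature, emoji, horn]
emoji_tokens = {
    "🐴": [1.0, 0.0, 1.0, 0.0],
    "🐠": [0.0, 1.0, 1.0, 0.0],
    "🦄": [0.8, 0.0, 1.0, 0.6],
}

seahorse = [0.6, 0.8, 0.0, 0.0]
emoji = [0.0, 0.0, 1.0, 0.0]
intended = [s + e for s, e in zip(seahorse, emoji)]  # "seahorse" + "emoji"

for tok, score in rank(intended, emoji_tokens):
    print(tok, round(score, 2))
# 🐠 0.9
# 🐴 0.8
# 🦄 0.74
```

Because no token sits at the 'seahorse emoji' position, the nearest existing neighbor wins: here a sea creature, with the horse emoji close behind.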

Once a wrong emoji has been output, it is up to the model to decide how to correct course. When Theia tried again with Claude-4.5-Sonnet, the model treated the wrong emoji it had just produced as evidence, updated its answer accordingly, and concluded, 'There is no seahorse emoji.'


in Web Service, Posted by logc_nt