NVIDIA open-sources 'Audio2Face,' a system that generates 3D avatar facial animation from voice with lip sync closely matched to the speech

NVIDIA has open-sourced Audio2Face, an AI-powered tool that generates realistic facial animation for 3D avatars from audio. Developers can now use Audio2Face and its underlying framework to build realistic 3D characters for games and apps.

NVIDIA Open Sources Audio2Face Animation Model | NVIDIA Technical Blog

Nvidia is letting anyone use its AI voice animation tech | The Verge

https://www.theverge.com/news/785981/nvidia-audio2face-ai-voice-animation-open-source

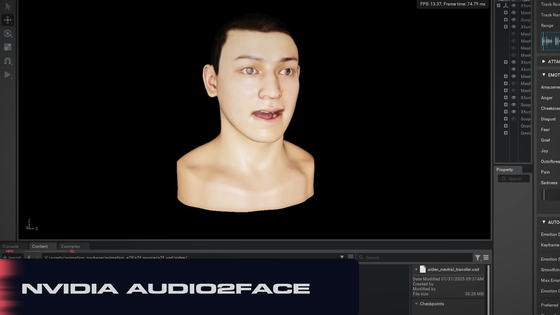

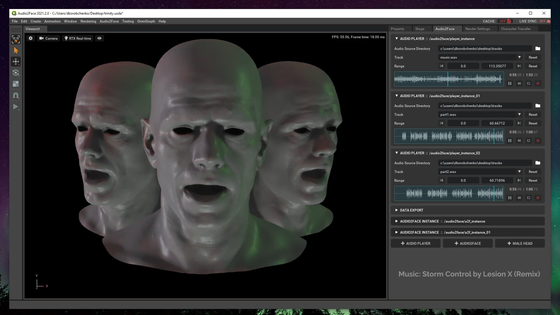

NVIDIA's Audio2Face analyzes the acoustic characteristics of speech and generates animation data that drives a 3D avatar's facial expressions and lip movements. The video below shows the animation being generated.

NVIDIA ACE | New Audio-Driven AI Facial Animation Features Coming to NVIDIA Audio2Face - YouTube

Audio2Face uses AI to analyze features of the input speech, such as phonemes and intonation, and produces a stream of animation data. That stream is then mapped onto the character's facial expressions, yielding accurate lip sync.
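
Audio2Face itself relies on a trained neural model, and the snippet below does not reproduce it. As a rough, hypothetical illustration of what "a stream of animation data mapped to facial expressions" means, this sketch produces one value per animation frame from the loudness of the audio and turns it into a weight for a single assumed blendshape named `jawOpen`. This is a crude amplitude-driven "lip flap" technique with no phoneme or emotion awareness; the function names, the 16 kHz sample rate, and the 30 fps frame rate are all assumptions made for the example.

```python
import math
from typing import Dict, List, Sequence

SAMPLE_RATE = 16_000  # assumed input sample rate in Hz (hypothetical)
FPS = 30              # assumed animation frame rate (hypothetical)


def frame_rms(samples: Sequence[float], sample_rate: int, fps: int) -> List[float]:
    """Split mono audio into one window per animation frame and return each window's RMS energy."""
    hop = sample_rate // fps  # audio samples per animation frame
    energies = []
    for start in range(0, len(samples) - hop + 1, hop):
        window = samples[start:start + hop]
        energies.append(math.sqrt(sum(x * x for x in window) / hop))
    return energies


def energy_to_jaw_curve(energies: List[float], smoothing: float = 0.5) -> List[Dict[str, float]]:
    """Map per-frame energy to a hypothetical 'jawOpen' weight in [0, 1], smoothed so the jaw does not jitter."""
    peak = max(energies, default=0.0)
    frames = []
    prev = 0.0
    for e in energies:
        target = e / peak if peak > 0 else 0.0  # normalize loudness to [0, 1]
        prev = smoothing * prev + (1.0 - smoothing) * target  # exponential smoothing
        frames.append({"jawOpen": round(prev, 3)})
    return frames


# Usage: one second of silence followed by one second of a 220 Hz tone standing in for speech.
signal = [0.0] * SAMPLE_RATE + [
    0.5 * math.sin(2 * math.pi * 220 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)
]
frames = energy_to_jaw_curve(frame_rms(signal, SAMPLE_RATE, FPS))
print(len(frames), frames[0], frames[-1])
```

In a real pipeline each frame would carry many blendshape weights (lips, cheeks, brows, eyes) predicted from the speech content rather than a single loudness-driven value. That richer, learned mapping is what a model like Audio2Face provides.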

Audio2Face has already been widely adopted in games, video production, advertising, and more. For example, EA Sports' racing game 'F1 25' uses Audio2Face to animate scenes in which in-game characters hold conversations. The video below shows the development tools and the resulting in-game animation starting at around the 6:15 mark.

F1 25 Authenticity & Customization Deep Dive - YouTube

The open-source release includes the Audio2Face model for lip-sync generation, the Audio2Emotion model for estimating emotional state from speech, the Audio2Face software development kit (SDK), plugins for Autodesk Maya and Unreal Engine 5, the training framework for the Audio2Face model, and sample training data for it. All of these can be downloaded from the page below.

ACE for Games | NVIDIA Developer

https://developer.nvidia.com/ace-for-games#section-getting-started

The source code is also hosted on GitHub.

GitHub - NVIDIA/Audio2Face-3D-SDK: High-performance C++/CUDA SDK for running Audio2Emotion and Audio2Face inference with integrated post-processing.

https://github.com/NVIDIA/Audio2Face-3D-SDK
