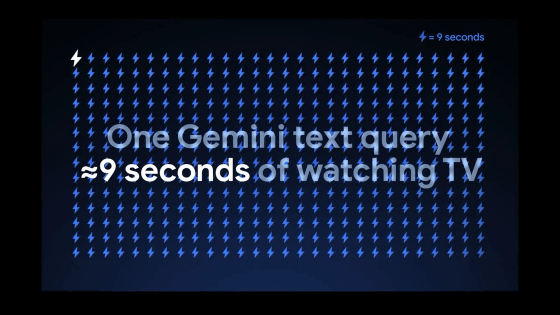

Each Gemini prompt uses about as much electricity as watching television for 9 seconds and about 5 drops of water.

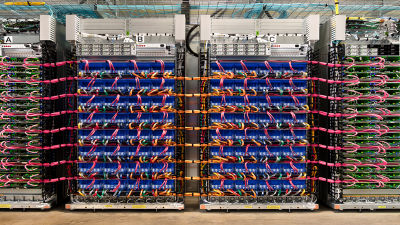

When you use AI systems like Gemini and ChatGPT, sophisticated calculations are performed in data centers around the world, consuming a large amount of electricity for computation and water for cooling the machines. Google has published its own estimates of how much energy and water this kind of AI consumes.

Measuring the environmental impact of AI inference | Google Cloud Blog

AI has developed rapidly, and in just a few years both performance and energy efficiency have improved dramatically. According to Google, over the past year Gemini's energy consumption has fallen by a factor of 33 and its total carbon emissions by a factor of 44.

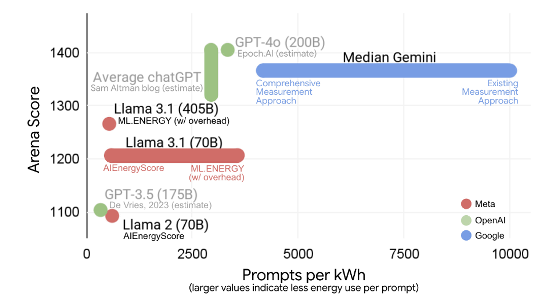

In the graph below, the vertical axis shows AI performance (higher is better) and the horizontal axis shows the number of tokens that can be processed per kWh of electricity (further to the right is more energy efficient). Google's Gemini is more energy efficient than Meta's Llama 3.1 and OpenAI's GPT-4o.

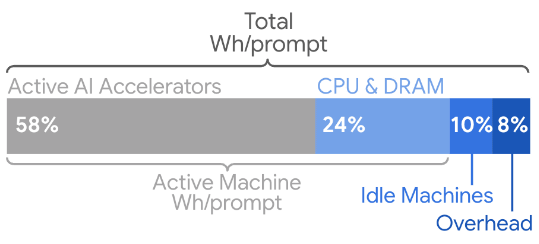

While several organizations have published their own calculations of AI energy efficiency, these figures do not necessarily reflect actual operating conditions. The graph below breaks down the power consumed by AI data centers into 'AI processing chips such as GPUs and TPUs,' 'CPUs and DRAM,' 'idle machines,' and 'power for operating the data center.' Everything other than the AI processing chips accounts for 42% of the total, so an accurate calculation of AI power consumption must take these components into account as well.
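To make the effect of that 42% concrete, here is a minimal Python sketch. It assumes, purely for illustration, that the 42% non-chip share applies uniformly to every prompt, which is a simplification of how Google actually measures; the function names are hypothetical and not taken from Google's paper.

```python
# Share of data center power NOT drawn by AI processing chips (from the article).
NON_CHIP_SHARE = 0.42


def full_stack_energy(chip_only_wh: float, non_chip_share: float = NON_CHIP_SHARE) -> float:
    """Scale a chip-only energy figure up to a whole-data-center figure.

    Assumes the non-chip share is the same for every prompt (illustrative only).
    """
    if not 0 <= non_chip_share < 1:
        raise ValueError("non_chip_share must be in [0, 1)")
    return chip_only_wh / (1 - non_chip_share)


def understatement_factor(non_chip_share: float = NON_CHIP_SHARE) -> float:
    """How many times larger the full figure is than a chip-only figure."""
    return full_stack_energy(1.0, non_chip_share)


if __name__ == "__main__":
    print(f"Full-stack energy is about {understatement_factor():.2f}x the chip-only energy")
```

Under this assumption, a measurement that counts only GPUs and TPUs would understate the total by a factor of roughly 1.7.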

Google added further criteria to bring its figures closer to actual operations, such as 'taking into account idle machines kept on standby to ensure reliability,' 'considering CPUs and DRAM in addition to AI processing chips such as GPUs and TPUs,' and 'considering not only computing machines but also data center cooling and power distribution systems.' As a result, Google found that the median Gemini prompt consumes 0.24 Wh of energy, emits 0.03 g of carbon dioxide, and uses 0.26 ml of water. This is equivalent to the energy used by watching television for nine seconds and to about five drops of water.
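The comparisons to television and water drops can be sanity-checked with simple unit conversions. The following Python sketch uses only the figures quoted in the article; the television wattage and the drop volume are values implied by those figures, not numbers stated by Google, and the helper names are hypothetical.

```python
# Per-prompt medians quoted in the article.
ENERGY_WH = 0.24   # watt-hours per prompt
WATER_ML = 0.26    # milliliters per prompt
TV_SECONDS = 9     # "watching television for nine seconds"
DROPS = 5          # "five drops of water"


def implied_tv_power_watts(energy_wh: float, seconds: float) -> float:
    """Power a TV would need to draw to use `energy_wh` in `seconds`.

    1 Wh = 3600 watt-seconds, so power (W) = energy (Wh) * 3600 / time (s).
    """
    return energy_wh * 3600 / seconds


def implied_drop_volume_ml(water_ml: float, drops: int) -> float:
    """Volume of a single drop implied by the comparison."""
    return water_ml / drops


if __name__ == "__main__":
    print(f"Implied TV power: {implied_tv_power_watts(ENERGY_WH, TV_SECONDS):.0f} W")
    print(f"Implied drop size: {implied_drop_volume_ml(WATER_ML, DROPS):.3f} ml")
```

The comparison works out to a television drawing roughly 96 W and a drop of roughly 0.05 ml, both of which are plausible everyday values.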

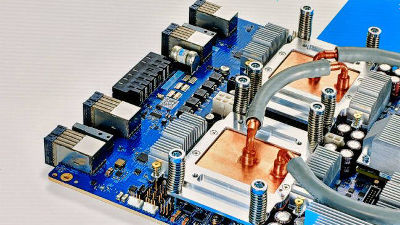

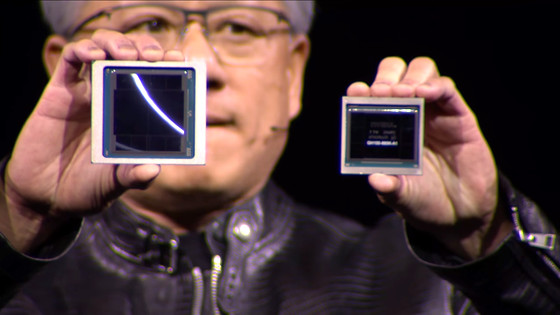

Google attributes this result to the energy-efficient algorithms it selected during the development of Gemini and to the power efficiency of its AI processing chip, the TPU. Google also stated that it will continue working to reduce its power and water consumption.

The specific method used by Google to calculate power and water consumption is summarized in the following paper.

Measuring the environmental impact of delivering AI at Google Scale

(PDF file) https://services.google.com/fh/files/misc/measuring_the_environmental_impact_of_delivering_ai_at_google_scale.pdf
