The prompt engineering diagrams in 'Prompt Engineering for LLMs,' a book by developers of the AI coding assistant GitHub Copilot, are easy to understand.

Alex Strick van Linschoten - Assembling the Prompt: Notes on 'Prompt Engineering for LLMs' ch 6

https://mlops.systems/posts/2025-01-13-assembling-the-prompt:-notes-on-prompt-engineering-for-llms-ch-6.html

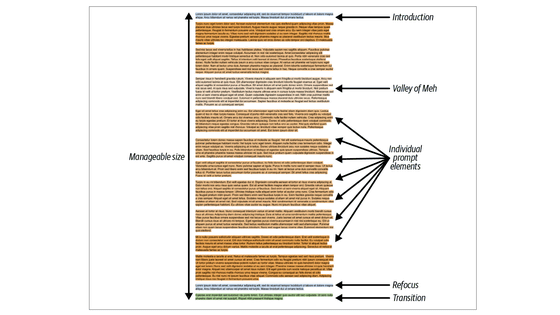

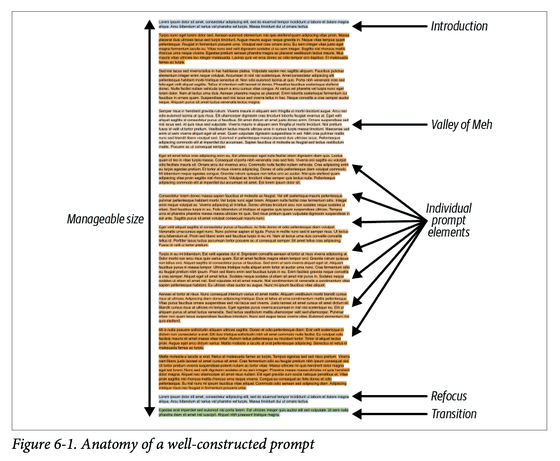

At the beginning of Chapter 6 of 'Prompt Engineering for LLMs,' there is a great diagram that helps you understand the 'structure of a well-constructed prompt.' The prompt as a whole is kept to a 'manageable' length. It opens with an 'Introduction' that sets out the task, places the 'Individual Prompt Elements' in the middle, and ends by 'refocusing' on the task. The middle section is labeled the 'Valley of Meh,' meaning that LLMs tend to make less effective use of information placed there.

Linschoten draws two key takeaways from this diagram: the closer information is to the end of the prompt, the more influence it has on the model, and the model often struggles with information crammed into the middle of the prompt.
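As a rough illustration of that layout, here is a minimal sketch, assuming each middle element carries a hypothetical importance score. The `Element` class and `build_prompt` function are illustrative names, not from the book. The sketch puts the introduction first, orders the middle elements so the most important ones sit closest to the end, and closes with a refocusing line.

```python
from dataclasses import dataclass


@dataclass
class Element:
    text: str
    importance: float  # hypothetical score: higher means more important


def build_prompt(introduction: str, elements: list[Element], refocus: str) -> str:
    """Lay out a prompt as introduction, middle elements, then a refocus.

    Middle elements are sorted by ascending importance, so the most important
    ones land nearest the end of the prompt rather than deep in the middle.
    """
    middle = sorted(elements, key=lambda e: e.importance)
    parts = [introduction] + [e.text for e in middle] + [refocus]
    return "\n\n".join(parts)


prompt = build_prompt(
    "You are helping a user plan a trip to Kyoto.",
    [
        Element("Background: the user has visited Tokyo before.", 0.2),
        Element("Constraint: the trip is three days long.", 0.9),
        Element("Preference: the user likes temples.", 0.6),
    ],
    "Now, suggest a three-day itinerary for the user.",
)
print(prompt)
```

The importance scores here are made up; in practice they would come from whatever relevance signal the application has.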

In 'Prompt Engineering for LLMs,' a prompt together with the completion it produces is called a 'document.' There are several document templates, such as the 'advice conversation,' the 'analytic report,' and the 'structured document.' For example, an analytic report places a lighter 'cognitive load' on the LLM than an advice conversation, because it does not require the model to handle complex social interactions.

Tips for creating analytic report documents include opening with a table of contents to set the stage and including a scratchpad section where the model can think through the problem.
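A minimal sketch of such a document, assuming a Markdown-style report that a completion model is asked to continue. The function name, section titles, and sample text are hypothetical. The table of contents comes first, and the document stops inside an open "Scratchpad" section so the model's continuation starts with its reasoning.

```python
def analytic_report_prompt(title: str, background: str, question: str) -> str:
    """Build a report-style document for a completion model to continue.

    The table of contents sets the stage, and the document ends with an open
    "Scratchpad" heading so the model reasons there before the conclusion.
    """
    sections = ["Background", "Question", "Scratchpad", "Conclusion"]
    toc = "\n".join(f"{i}. {name}" for i, name in enumerate(sections, start=1))
    return (
        f"# {title}\n\n"
        f"## Table of Contents\n{toc}\n\n"
        f"## 1. Background\n{background}\n\n"
        f"## 2. Question\n{question}\n\n"
        f"## 3. Scratchpad\n"
    )


report = analytic_report_prompt(
    "Quarterly Sales Review",
    "Sales rose in Q1 and fell in Q2.",
    "What likely explains the Q2 decline?",
)
print(report)
```

Listing "Conclusion" in the table of contents hints to the model that the scratchpad should eventually give way to a final answer.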

'Structured documents are very powerful, especially when the model has been trained to expect a particular kind of structure (JSON, XML, YAML, etc.). In fact, OpenAI's models have proven to be especially strong at handling JSON as input,' Linschoten wrote.
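To make the idea concrete, here is a minimal sketch, assuming the task and its inputs are serialized as a single JSON document. The schema (`task`, `records`, `expected_output`) and the function name are hypothetical choices for illustration, not a format prescribed by the book or by OpenAI.

```python
import json


def structured_prompt(task: str, records: list[dict]) -> str:
    """Serialize the task and its inputs as one JSON document (hypothetical schema)."""
    document = {
        "task": task,
        "records": records,
        # Describing the expected output shape is meant to encourage a JSON answer.
        "expected_output": {"summary": "string", "flagged_ids": "list of integers"},
    }
    return json.dumps(document, indent=2, ensure_ascii=False)


prompt = structured_prompt(
    "Summarize the support tickets and flag any that mention a refund.",
    [
        {"id": 1, "text": "The app crashes on startup."},
        {"id": 2, "text": "I would like a refund for my subscription."},
    ],
)
print(prompt)
```

Using `json.dumps` rather than hand-formatting strings guarantees the input is valid JSON, which matters if the model has been trained to expect that structure.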

How much context to insert into the prompt depends on the size of the model's context window and on latency requirements, and there are several ways to choose which context to insert.

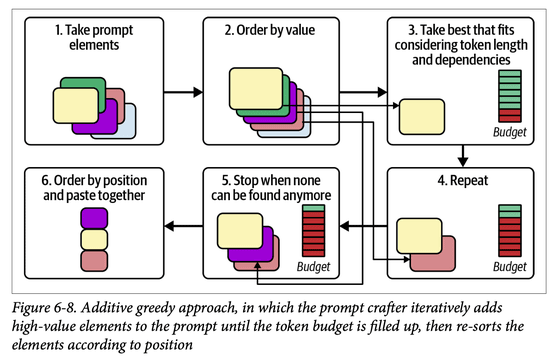

Other considerations for creating a well-constructed prompt include the order in which the elements appear, how much removing a given element would affect the response, and whether including one element means another has to be left out.

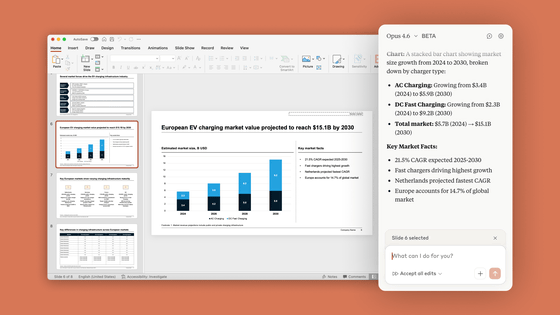

The diagram shows the flow for assembling a well-structured prompt: '1: Select the prompt elements,' '2: Sort each element by value,' '3: Select the best one, taking into account token length and dependencies,' '4: Repeat,' '5: Stop repeating when no more elements can be added,' and finally '6: Sort the selected elements by position and combine them.'
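The six steps above can be sketched as a greedy selection loop. This is a minimal sketch under stated assumptions, not the book's implementation: each element is assumed to come with a pre-computed value score, a token count, a target position, and hypothetical `requires`/`incompatible` sets standing in for dependencies. Real code would count tokens with the model's own tokenizer.

```python
from dataclasses import dataclass, field


@dataclass
class PromptElement:
    name: str
    text: str
    value: float   # hypothetical score: expected benefit to the response
    tokens: int    # hypothetical pre-computed token length
    position: int  # where the element belongs in the final prompt
    requires: set[str] = field(default_factory=set)      # must already be chosen
    incompatible: set[str] = field(default_factory=set)  # cannot appear together


def assemble_prompt(elements: list[PromptElement], token_budget: int) -> str:
    # Steps 1-2: take the candidate elements and sort them by value, best first.
    candidates = sorted(elements, key=lambda e: e.value, reverse=True)
    chosen: list[PromptElement] = []
    used = 0
    while True:
        chosen_names = {e.name for e in chosen}
        pick = None
        # Step 3: the most valuable element that fits the budget and whose
        # dependencies (requirements and incompatibilities) are satisfied.
        for e in candidates:
            if e.name in chosen_names or used + e.tokens > token_budget:
                continue
            if not e.requires <= chosen_names:
                continue
            if e.incompatible & chosen_names or any(e.name in c.incompatible for c in chosen):
                continue
            pick = e
            break
        # Step 5: stop when no remaining element can be added.
        if pick is None:
            break
        # Step 4: add the element and repeat.
        chosen.append(pick)
        used += pick.tokens
    # Step 6: restore document order and join the selected elements.
    chosen.sort(key=lambda e: e.position)
    return "\n".join(e.text for e in chosen)


elements = [
    PromptElement("intro", "Intro", value=10, tokens=5, position=0),
    PromptElement("snippet_a", "Snippet A", value=6, tokens=50, position=1),
    PromptElement("snippet_b", "Snippet B", value=5, tokens=50, position=2,
                  incompatible={"snippet_a"}),
    PromptElement("note", "Note about A", value=4, tokens=10, position=3,
                  requires={"snippet_a"}),
    PromptElement("refocus", "Refocus", value=9, tokens=5, position=4),
]
assembled = assemble_prompt(elements, token_budget=200)
print(assembled)
```

Because the loop rescans the sorted candidates after every pick, an element whose requirement has a lower value (like `note` here) can still be added once that requirement is in place.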

The Japanese edition, 'LLM Prompt Engineering,' is also available, priced at 4,180 yen including tax on Amazon.co.jp. Blog posts and slides explaining 'LLM Prompt Engineering' are available too, so if you are interested in its contents, you may want to look at them before buying the book.

Amazon.co.jp: LLM Prompt Engineering: Generative AI Application Development from the Developers Who Created GitHub Copilot: John Berryman, Albert Ziegler, Yuki Hattori (translator), Nao Sato (translator): Books


in Software, Posted by logu_ii