What would AI tools that augment human strengths look like?

With the development of large language models, AI can now process the natural language that humans use every day. AI is being built into a wide range of systems because of its convenience, but some fear that it will erode human creativity and make people overly dependent on it. Software engineer Hazel Weakly argues that this situation could be improved by building AI tools that enhance human strengths.

Stop Building AI Tools Backwards | Hazel Weakly

Weakly argues that existing AI simply hands over 'answers' one-sidedly without enhancing human thinking, so people cannot make full use of AI's value. Noting that 'humans have their own strengths,' Weakly offers the following thoughts:

'First, humans do not truly learn by simply taking information into their brains; they can only say they have learned once they output that information. Second, what do we learn? What we learn most effectively is not knowledge but process. For example, if you want to learn how to bake a cake, memorizing the recipe steps does not mean you have actually learned it. Third, how do we level up? Humans are very bad at innovating from scratch, and in technology, results often come down to the individual skill of the developer. However, humans are inherently optimized for community, so it should be possible for people to learn collectively and accumulate knowledge through imitation and repetition.'

Putting these ideas together, humans learn best through a process that requires some effort: accumulating knowledge, learning iteratively, and solving problems collectively. AI, however, skips all of this and just presents the 'answer.' Weakly points out that 'AI is bad at what humans are good at, but for some reason we're trying to get AI to do what humans are good at.'

As a result, Weakly said, humans will deskill themselves faster than they can improve AI. They will then no longer be able to provide AI with the high-quality data it needs to enhance human abilities, which in turn will prevent them from improving AI.

However, this could potentially be improved with just a little extra effort in how AI tools are designed.

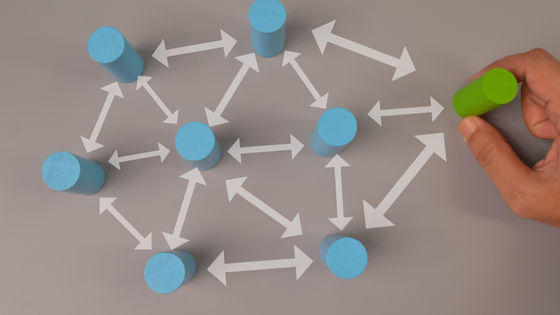

The AI that Weakly is looking for is not one that presents 'answers,' but one that points toward 'solutions' and gets humans thinking. Rather than giving the answer right away, it reminds people, one step at a time, of what they should do and prompts them to think. For example, instead of immediately returning search results in response to a human prompt, it converts the prompt into a search query and lets the human run the search themselves.

In addition, when a human gives a prompt like 'I'm having trouble with ____,' existing AI starts by listing every likely cause. Weakly argues that AI should instead respond with questions that stimulate human thought, such as 'What exactly are you having trouble with?' and 'Can you tell me the steps you're trying to take?'
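As a rough illustration of this question-first behavior, here is a minimal Python sketch. It is not taken from Weakly's post or from any real tool: the `GuidedSession` class and its `respond` method are hypothetical names invented for this example, and the only material from the article is the pair of clarifying questions. The idea is simply that the tool replies with the next question rather than a list of causes, then reflects the human's own reasoning back to them.

```python
from dataclasses import dataclass, field

# Hypothetical illustration: only these two questions come from the article.
CLARIFYING_QUESTIONS = [
    "What exactly are you having trouble with?",
    "Can you tell me the steps you're trying to take?",
]


@dataclass
class GuidedSession:
    """Keeps the human's messages and replies with questions, not answers."""

    answers: list[str] = field(default_factory=list)

    def respond(self, human_message: str) -> str:
        # Record what the human said so it can be reflected back later.
        self.answers.append(human_message)
        asked = len(self.answers) - 1  # questions already asked before this turn
        if asked < len(CLARIFYING_QUESTIONS):
            # Reply with the next clarifying question instead of a list of causes.
            return CLARIFYING_QUESTIONS[asked]
        # Out of questions: summarize the human's own account of the problem.
        summary = "\n".join(f"- {a}" for a in self.answers)
        return "Here is what you've told me so far:\n" + summary
```

In use, a first call such as `GuidedSession().respond("I'm having trouble with deploys")` returns the first clarifying question rather than a diagnosis. A real tool would presumably generate its questions from context instead of using a fixed list.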

'AI that tries to replace the things humans are really good at will degrade people's skills,' said Weakly. 'Humans who can reason and collaborate must prioritize building tools for reasoning and collaboration to support and enhance human-led processes. This will result in better tools, which in turn improve humans, creating a positive feedback loop. System tools require a revolutionary change in how they are designed, implemented and evaluated. But that change will not happen unless they are designed with humans at the center. Don't just put humans in the loop; remember that humans are the loop.'

in Software, Posted by log1p_kr