OpenAI research reveals that doctors who use AI make 16% fewer diagnostic errors

A study on AI Consult, an AI system for healthcare that only activates when there is a possibility of an error, found that doctors using the AI system made 16% fewer diagnostic errors than those who did not. OpenAI reported the findings.

Pioneering an AI clinical copilot with Penda Health | OpenAI

OpenAI has partnered with Penda Health on AI Consult.

Clinicians see patients of all ages and with all kinds of illnesses in the same day. This requires a wide range of knowledge, and the complexity makes medical errors common. AI Consult addresses this by integrating into the electronic health record systems doctors already use and flagging diagnostic mistakes, medication errors, and incorrect patient instructions.

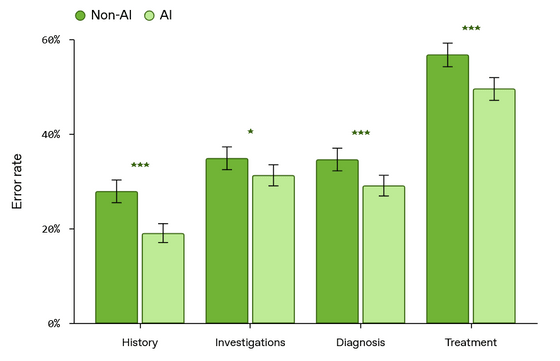

OpenAI selected 39,849 consultations from 15 clinics to investigate how much AI Consult was helping clinicians. Of the total, 20,859 consultations used AI Consult and 18,990 did not. A total of 108 doctors participated in the study, reviewing 5,666 randomly selected consultations and their documentation to identify errors.
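As a quick sanity check on the figures above, the following minimal sketch confirms that the two groups add up to the reported total and works out what share of consultations used AI Consult and what share was reviewed. The variable names are illustrative assumptions. Only the four numbers come from the article.

```python
# Figures quoted in the article; variable names are illustrative only.
with_ai = 20_859         # consultations that used AI Consult
without_ai = 18_990      # consultations that did not
reported_total = 39_849  # total consultations reported
reviewed = 5_666         # consultations reviewed by the doctors

# The two groups should account for every consultation in the study.
total = with_ai + without_ai
assert total == reported_total

# Share of consultations in the AI group and share that was reviewed.
share_with_ai = 100 * with_ai / total
share_reviewed = 100 * reviewed / total

print(f"Used AI Consult: {share_with_ai:.1f}% of consultations")
print(f"Reviewed sample: {share_reviewed:.1f}% of consultations")
```

The split is close to even, with slightly more than half of the consultations in the AI Consult group, and the reviewed sample covers roughly one in seven consultations.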

The evaluation criteria were 'medical history,' 'appropriateness of examination,' 'accuracy of diagnosis,' and 'accuracy of treatment.' In all four areas, doctors who used AI made fewer mistakes than those who did not. Overall, the study showed that the introduction of AI Consult prevented 22,000 diagnostic errors and 29,000 treatment errors per year.

Doctors who used AI Consult said things like, 'The quality of patient care has improved,' 'It helped me make the right clinical decisions,' and 'It was also an excellent learning tool.'

When Penda Health introduced AI Consult, it provided one-on-one training to teach doctors how to use it properly. As a result, doctors began proactively checking error messages that they had often dismissed as unimportant in the early stages of the rollout.

AI Consult, which had initially received only general training, was also given further training and feedback so that it fit the way Penda Health practices. For example, in the initial settings an error message was displayed when a child's blood pressure was not measured, but because Penda Health does not routinely measure children's blood pressure, this error message was removed.

Based on these results, OpenAI points out that the main challenge in integrating AI into real-world systems will not be the performance of the models, but closing the gap between the models and how they are actually used in practice.

OpenAI said, 'Concerted efforts across the medical AI ecosystem are essential to closing the gap between models and implementation. As we continue to research and expand AI systems, we hope that AI will become a trusted part of standard medical care and that this effort will provide inspiration and practical guidance for advancing the use of AI.'

in Software, Posted by log1p_kr