Nagoya University researchers announce 'J-Moshi,' a voice dialogue AI model that perfectly reproduces Japanese backchannel responses

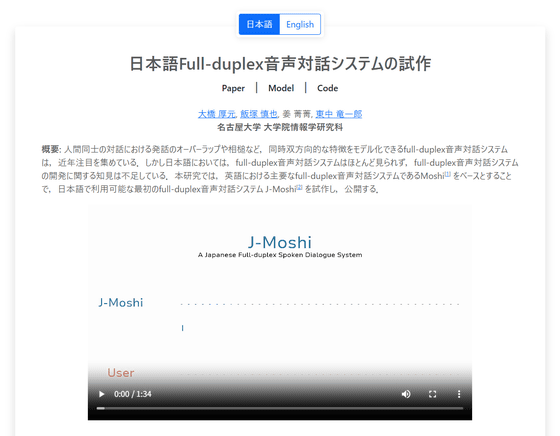

A research team from the Graduate School of Informatics at Nagoya University has announced 'J-Moshi,' a Japanese full-duplex spoken dialogue system. J-Moshi is groundbreaking in that it brings full-duplex (simultaneous two-way) spoken dialogue, which has been attracting attention in recent years, to Japanese. It is based on Moshi, a full-duplex spoken dialogue system for English.

J-Moshi

https://nu-dialogue.github.io/j-moshi/

[2506.02979] Towards a Japanese Full-duplex Spoken Dialogue System

https://arxiv.org/abs/2506.02979

First publicly available Japanese AI dialogue system can speak and listen simultaneously

https://techxplore.com/news/2025-07-japanese-ai-dialogue-simultaneously.html

Natural Japanese conversation involves short responses such as 'I see' and 'Is that so?' far more frequently than English does, so for an AI to converse naturally it must 'speak' and 'listen' at the same time. However, conventional AI could not do both simultaneously, which made it difficult to produce backchannel responses.

A development team led by researchers from the Higashinaka Laboratory at the Graduate School of Informatics, Nagoya University, developed J-Moshi by adapting Moshi, a spoken dialogue system developed by Kyutai, an open-source AI research institute.

Moshi is an AI voice assistant that can express emotions in real time.

French AI research institute releases 'Moshi', an AI voice assistant that can express emotions in real time - GIGAZINE

J-Moshi took approximately four months to develop and was trained on multiple Japanese speech datasets.

J-Moshi is an AI system designed to mimic human speech. It captures the natural flow of Japanese conversation, including the 'backchannel' responses that Japanese speakers make while the other person is talking.

If you play the video below, you will hear how natural J-Moshi's audio output sounds.

The datasets used for training include the Japanese dialogue dataset J-CHAT, created and published by the University of Tokyo, which contains approximately 67,000 hours of audio data collected from podcasts and YouTube.

The team also trained on smaller but higher-quality dialogue datasets, including audio collected in the lab and recordings made 20 to 30 years ago. To further increase the amount of training data, the team used a speech synthesis program to convert chat conversations into synthetic speech.

J-Moshi is also featured on Hugging Face.

nu-dialogue/j-moshi-ext · Hugging Face

https://huggingface.co/nu-dialogue/j-moshi-ext

Compared to English speech data, Japanese speech data is limited, making it difficult to adapt traditional voice dialogue systems to specific fields and industries. However, the development team points out that J-Moshi could be used commercially in Japanese call centers, medical settings, and customer service.

Professor Ryuichiro Higashinaka, leader of the research team, worked as a corporate researcher at NTT for 19 years before becoming a professor at Nagoya University in 2020. At NTT, he was involved in the development of consumer dialogue systems and voice agents, including a project to develop a question-and-answer function for the voice agent service 'Shabette Concierge.'
