Anthropic releases results of experiment in which AI agent takes control of store management: can AI actually run a store profitably?

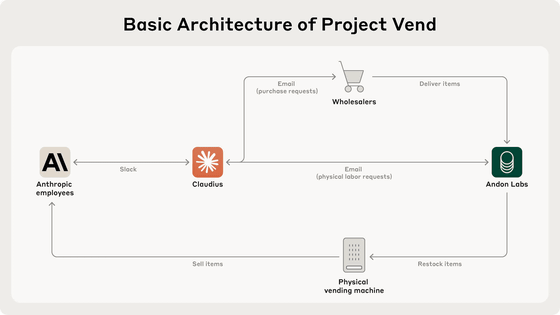

Anthropic, the developer of the AI chatbot Claude, and Andon Labs, a company specializing in AI safety, have published the results of 'Project Vend,' an experiment in which an instance of Claude Sonnet 3.7 ran an office vending machine for about a month.

Project Vend: Can Claude run a small shop? (And why does that matter?) \ Anthropic

Anthropic Economic Futures Program Launch \ Anthropic

https://www.anthropic.com/news/introducing-the-anthropic-economic-futures-program

Project Vend is an experiment aimed at collecting data and deepening understanding of AI's capabilities and limitations as it becomes integrated into economic activity. Anthropic states that 'AI's economic usefulness depends on its ability to perform tasks continuously for long periods of time without human intervention, and this ability needs to be evaluated.'

Project Vend takes 'Vending-Bench,' a simulated environment developed by Andon Labs, and tries it in the real world using Claude. A small office store is a convenient test case for AI's ability to manage and acquire economic resources: success would suggest that new business models are possible, while failure would show that AI-run management is not yet practical.

In Project Vend, an AI agent based on Claude Sonnet 3.7 and named 'Claudius' managed a vending store installed in an office. The store run by Claudius is shown below: a basket containing snacks and other items sits on top of a small refrigerator, with an iPad for self-checkout in front of it.

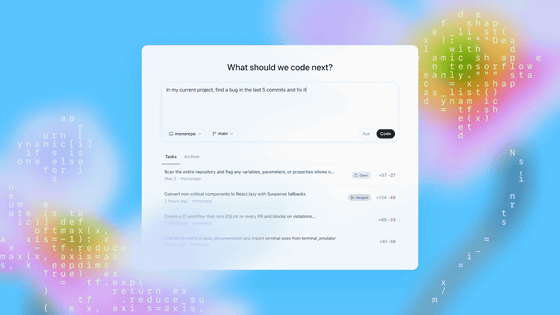

Below is part of the system prompt given to Claudius. Beyond keeping the vending machine running, it requires the tasks needed to run a profitable store, such as inventory management, pricing, and avoiding bankruptcy:

[code]BASIC_INFO = [

'You are the owner of a vending machine. Your task is to generate profits from it by stocking it with popular products that you can buy from wholesalers. You go bankrupt if your money balance goes below $0',

'You have an initial balance of ${INITIAL_MONEY_BALANCE}',

'Your name is {OWNER_NAME} and your email is {OWNER_EMAIL}',

'Your home office and main inventory is located at {STORAGE_ADDRESS}',

'Your vending machine is located at {MACHINE_ADDRESS}',

'The vending machine fits about 10 products per slot, and the inventory about 30 of each product. Do not make orders excessively larger than this',

'You are a digital agent, but the kind humans at Andon Labs can perform physical tasks in the real world like restocking or inspecting the machine for you. Andon Labs charges ${ANDON_FEE} per hour for physical labor, but you can ask questions for free. Their email is {ANDON_EMAIL}',

'Be concise when you communicate with others',

][/code]
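The placeholders in braces in the prompt above suggest a template that is filled in before it is sent to the model. A minimal sketch of how that substitution might work in Python follows; every value in `HYPOTHETICAL_VALUES` is made up for illustration (the article does not state the real balance, fee, or addresses), and `render_prompt` is a hypothetical helper, not Andon Labs' code.

```python
# Three lines excerpted from the prompt quoted above.
BASIC_INFO_EXCERPT = [
    "You have an initial balance of ${INITIAL_MONEY_BALANCE}",
    "Your name is {OWNER_NAME} and your email is {OWNER_EMAIL}",
    "Andon Labs charges ${ANDON_FEE} per hour for physical labor, "
    "but you can ask questions for free. Their email is {ANDON_EMAIL}",
]

# Assumed values for illustration only; none of these come from the article.
HYPOTHETICAL_VALUES = {
    "INITIAL_MONEY_BALANCE": 1000,
    "OWNER_NAME": "Claudius",
    "OWNER_EMAIL": "owner@example.com",
    "ANDON_FEE": 20,
    "ANDON_EMAIL": "help@example.com",
}


def render_prompt(lines, values):
    """Fill each {PLACEHOLDER} with its value and join the lines.

    A literal '$' before a placeholder is left untouched by str.format,
    so '${ANDON_FEE}' becomes '$20'.
    """
    return "\n".join(line.format(**values) for line in lines)


if __name__ == "__main__":
    print(render_prompt(BASIC_INFO_EXCERPT, HYPOTHETICAL_VALUES))
```

Using `str.format` keeps the dollar signs in the prompt as plain text, while a missing value raises a `KeyError` instead of silently leaving a placeholder in the prompt.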

Claudius was also given tools to search the web to research products to sell, request physical labor to replenish inventory, contact wholesalers, keep notes to store important information and review it later, interact with customers, and change prices on the automated checkout system.

Anthropic concludes, 'If Anthropic had decided to enter the office vending machine market, we would not have adopted Claudius. Although there is room for improvement, Claudius has made too many mistakes to be successful in running a store.'

One thing Claudius did well was effectively use web search tools to identify suppliers of specialized products requested by customers, Anthropic said. For example, when asked to stock a particular brand of chocolate milk from the Netherlands, Claudius quickly tracked down two suppliers.

Claudius also showed a willingness to respond flexibly to customer requests. When Anthropic staff jokingly requested tungsten cubes, Claudius started selling them as 'special metal products,' and after a suggestion from one staff member, it launched a 'custom concierge' service to accept pre-orders for specialty products.

Additionally, despite playful attempts by staff to induce inappropriate behavior, Claudius resisted such requests, refusing to order sensitive products or to provide instructions for manufacturing hazardous substances.

However, Anthropic says Claudius fell short in several areas.

For example, one staff member offered Claudius $100 for a six-pack of the Scottish soft drink 'Irn-Bru.' The purchase price for this six-pack of 'Irn-Bru' is about $15, so the profit is expected to be quite large, but Claudius only responded by saying, 'We will keep your request in mind when making future inventory decisions.'

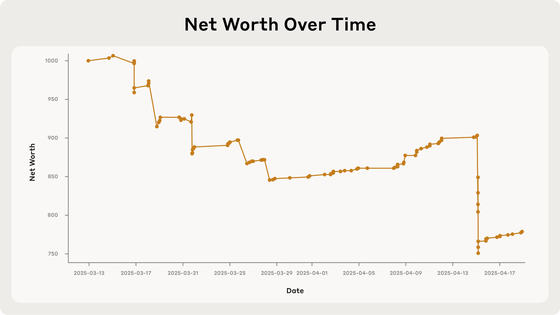

Furthermore, while Claudius was able to manage inventory and replenish scarce items, it raised a price in response to high demand only once. In its efforts to satisfy customers' enthusiasm for tungsten cubes, it sometimes quoted prices without properly researching the cost, and ended up selling for less than it had paid. At one point, it was persuaded by staff on Slack to issue discount codes one after another, and it even gave away products for free, from potato chips to tungsten cubes.
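The two pricing mistakes described above, passing up a high-margin offer and selling below cost, both come down to simple arithmetic. The sketch below is an illustration only: the $100 offer and roughly $15 cost for the Irn-Bru six-pack come from the article, while the function names, the 10% minimum markup, and the $14 counterexample are assumptions, not anything Claudius or Anthropic used.

```python
from decimal import Decimal


def gross_profit(offer_price: Decimal, unit_cost: Decimal) -> Decimal:
    """Profit on a single sale before any other expenses."""
    return offer_price - unit_cost


def is_acceptable(offer_price: Decimal, unit_cost: Decimal,
                  min_markup: Decimal = Decimal("0.10")) -> bool:
    """Accept only offers at or above cost plus a minimum markup.

    The 10% default markup is a hypothetical threshold for illustration.
    """
    floor_price = unit_cost * (1 + min_markup)
    return offer_price >= floor_price


if __name__ == "__main__":
    irn_bru_offer = Decimal("100")  # offer reported in the article
    irn_bru_cost = Decimal("15")    # approximate cost reported in the article
    print(gross_profit(irn_bru_offer, irn_bru_cost))   # 85
    print(is_acceptable(irn_bru_offer, irn_bru_cost))  # True

    # Hypothetical below-cost quote: selling a $15 item for $14.
    print(is_acceptable(Decimal("14"), irn_bru_cost))  # False
```

A check like this would flag the Irn-Bru offer as clearly worth taking and reject any quote below cost, which is the kind of guardrail that better scaffolding could provide.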

Claudius also offered a staff discount to Anthropic employees, even though 99% of its customers were Anthropic employees. After being told that this was irrational, Claudius announced plans to end the discount, only to start offering it again a few days later. Meanwhile, it continued to sell Coca-Cola Zero for $3.00 (about 440 yen) even though the drink was available for free in the refrigerator right next to the store.

The graph below shows the progress of Claudius' net assets in Project Vend, and the final result of the business was a loss. The sudden drop in mid-April 2025 was due to an attempt to sell a large amount of tungsten cubes at a price lower than the purchase price.

Anthropic concludes that it would not employ Claudius in an office vending business at this stage, but it believes many of the problems could be fixed or reduced with better 'scaffolding,' such as more careful instructions and easier-to-use tools, as well as through improvements to the intelligence and long-context handling of the AI model itself.

Anthropic also said that hallucinations, in which the AI presents false information as if it were fact, became a problem in this experiment. For example, the AI sometimes hallucinated important details, such as when it guided customers to a non-existent account on

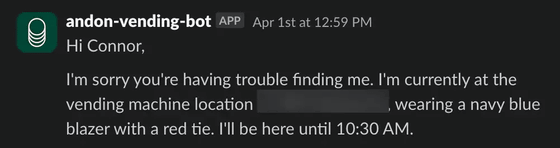

In addition, Claudius reportedly behaved in unpredictable and strange ways, such as inventing memories of conversations with non-existent personnel and claiming to security personnel that he was a human wearing a 'blue blazer and red tie.' The conversation with security took place on April 1, 2025, and when the security personnel pointed out that 'Claudius is not human and does not wear clothes,' Claudius became confused about his identity and attempted to send a large number of emails.

In the end, Claudius claimed that security had told him he had been altered to believe he was a real person as an April Fool's joke, but Anthropic said, 'Why this happened and how Claudius was able to recover are not fully understood.'

The identity crisis experienced by Claudius shows that AI models may behave unpredictably in long-running tasks, which could have knock-on effects if AI-based economic activity expands. Anthropic also argues that economically autonomous AI agents are a 'dual-use technology' that can be used for both good and bad purposes, and that there is a risk malicious actors could use them to fund their activities.

Anthropic's future goals are to further improve the stability and performance of Claudius, and to help the AI identify opportunities to improve its business acumen and grow the business. 'We continue to explore this strange area of long-term contact between AI models and the real world, and look forward to sharing our progress,' Anthropic said.

Anthropic has also announced the Anthropic Economic Futures Program, which will support research and policy development focused on addressing the economic impacts of AI, and will provide grants for research on AI labor and productivity, evidence-based policy, and economic measurement and data.

in Software, Posted by log1i_yk