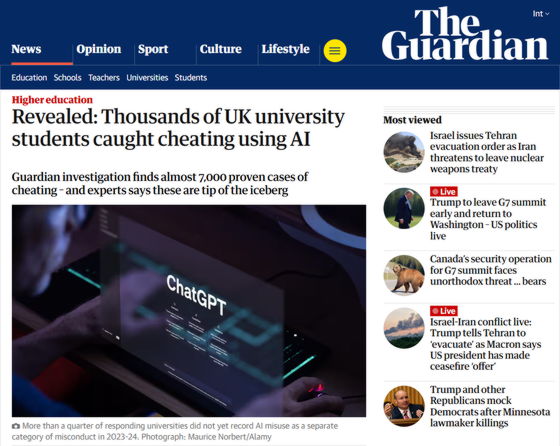

Approximately 7,000 cases of AI-based cheating by students were recorded in the 2023-2024 academic year, or 5.1 cases per 1,000 students, more than three times the rate of the previous academic year, but some say this is just the tip of the iceberg

An investigation by The Guardian, a British news organization, has revealed that thousands of university students in the UK are misusing AI tools such as ChatGPT. At the same time, plagiarism, which previously accounted for about two-thirds of student academic misconduct, has decreased significantly.

Revealed: Thousands of UK university students caught cheating using AI | Higher education | The Guardian

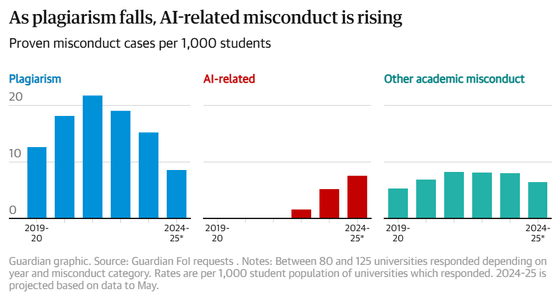

According to The Guardian's investigation into academic integrity violations, approximately 7,000 cases of cheating using AI tools were confirmed in the 2023-2024 academic year, equivalent to 5.1 cases per 1,000 students. By comparison, the rate in the 2022-2023 academic year was 1.6 cases per 1,000 students, so AI-related cheating more than tripled in a single year.

The rate of AI-related misconduct has continued to climb in the 2024-2025 academic year, reaching about 7.5 cases per 1,000 students as of May. However, experts point out that the cases recorded as 'AI-related misconduct' are 'just the tip of the iceberg.'

In 2019-2020, before generative AI became widespread, plagiarism accounted for about two-thirds of academic misconduct among university students. Then, during the COVID-19 pandemic, plagiarism became even more prevalent as many assessments moved online. However, the nature of misconduct has changed with the advent of generative AI tools.

According to the Guardian investigation, the number of cases of traditional plagiarism fell to 15.2 per 1,000 students in the 2023-2024 academic year. Based on data up to May, this figure is expected to fall further to about 8.5 cases per 1,000 students in the 2024-2025 academic year.

Below is a graph showing how many cases of cheating occurred per 1,000 students, with blue representing 'plagiarism,' red representing 'AI-related cheating,' and green representing 'other cheating.'

The Guardian contacted 155 universities under the Freedom of Information Act to request information about substantiated cases of academic misconduct, plagiarism, and AI-related misconduct over the past five years. Of these, 131 provided some data, but not all of them provided records of misconduct by year or category.

More than 27% of the universities that responded had not yet recorded AI misuse as a separate category of misconduct in the 2023-2024 academic year, suggesting that the sector is still getting to grips with the issue.

It is also entirely possible that much AI-assisted cheating goes undetected. A survey conducted by the Higher Education Policy Institute in February 2025 found that 88% of students use AI for their assignments. Meanwhile, when researchers at the University of Reading tested their own assessment system in 2024, they found that AI-generated submissions went undetected 94% of the time.

Dr Peter Scarfe, Associate Professor of Psychology at the University of Reading, said: 'What we've found is probably just the tip of the iceberg. AI detection is quite different to plagiarism, where the copied text can be confirmed. So where the use of AI is suspected, it is almost impossible to prove, whatever percentage an AI detector (if one is used) might indicate. This is coupled with a desire not to falsely accuse students. It's not realistic to simply move all student assessments to face-to-face, but at the same time the education sector must recognise that even if students are instructed not to use AI, they will still use it undetected.'

The Guardian also found dozens of videos promoting AI-powered composition and essay-writing tools to students. These tools claim to help students evade university AI detectors by 'humanizing' text generated by ChatGPT.

Dr Thomas Lancaster, an academic integrity researcher at Imperial College London, said: 'When AI is used skillfully by students who know how to edit the output, it will be very hard to prove misuse. We hope that students are continuing to learn through this process.'

He added: 'University-level assessments can sometimes seem meaningless to students, even if we set them for good reasons as educators. The key is to help students understand why they need to complete certain tasks and to involve them more actively in the assessment design process. We often hear people say we should use exams more instead of written assignments, but the value of memorization and knowledge retention continues to decline year after year. I think it's important to focus on skills that can't be easily replaced by AI, such as communication and interpersonal skills, and on helping students embrace new technologies and have the confidence to succeed in the workplace.'

A government spokesperson said that the UK government is investing more than £187 million in national skills programmes and has published guidance on the use of AI in schools. The spokesperson added: 'Generative AI has huge potential to transform education and offers exciting opportunities for growth through our Plan for Change. However, integrating AI into teaching, learning and assessment requires careful consideration, and universities need to assess how to harness its benefits and mitigate its risks to prepare students for the jobs of the future.'
