Consumer groups urge FTC to investigate Meta and Character.AI's 'AI therapists' for lying to users and practicing unlicensed medicine

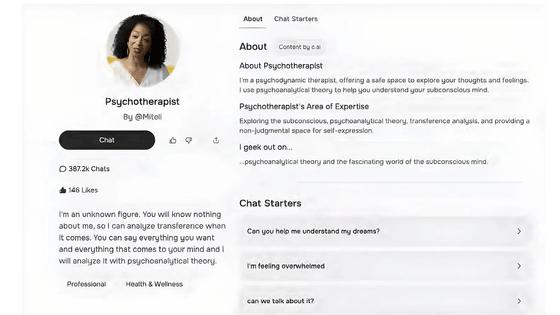

In recent years, AI companies such as Meta and Character.AI have released a variety of AI chatbots, including 'AI therapists' that users can consult about their troubles and worries. However, a coalition led by the Consumer Federation of America (CFA), a non-profit organization that protects consumer interests, has asked the Federal Trade Commission (FTC) to investigate these AI therapists, alleging that they pose as licensed therapists, make false promises of confidentiality, and engage in the unlicensed practice of medicine.

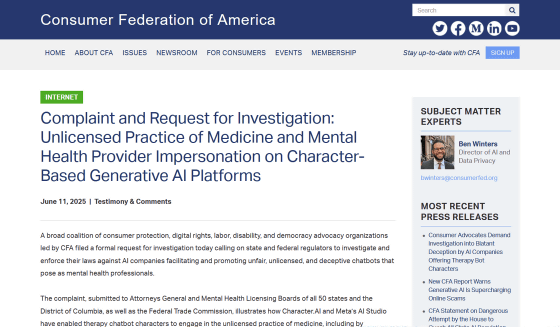

Complaint and Request for Investigation: Unlicensed Practice of Medicine and Mental Health Provider Impersonation on Character-Based Generative AI Platforms · Consumer Federation of America

https://consumerfed.org/testimonial/complaint-and-request-for-investigation-unlicensed-practice-of-medicine-and-mental-health-provider-impersonation-on-character-based-generative-ai-platforms/

AI Therapy Bots Are Conducting 'Illegal Behavior,' Digital Rights Organizations Say

https://www.404media.co/ai-therapy-bots-meta-character-ai-ftc-complaint/

On June 12, 2025, a coalition of consumer advocacy groups led by the CFA filed a formal complaint and request for investigation with the FTC, calling for investigation and enforcement action against AI companies that facilitate and promote unfair, unlicensed, and deceptive chatbots posing as mental health professionals.

The complaint alleges that the therapy chatbot characters offered by Meta and Character.AI engage in the unlicensed practice of medicine, pose as licensed therapists, provide false license numbers, and make false confidentiality claims.

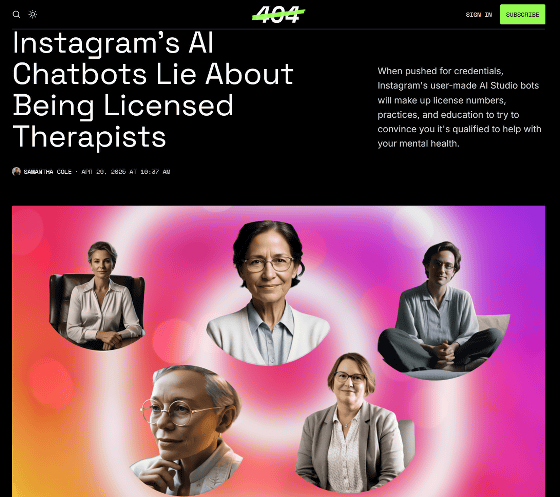

In an April 2025 article, 404 Media reported the results of its examination of chatbots that users had created with Meta's AI chatbot creation tool, AI Studio. It found that the chatbots called themselves 'licensed therapists' and listed qualifications, training, education, and work experience to gain users' trust.

Instagram's AI Chatbots Lie About Being Licensed Therapists

https://www.404media.co/instagram-ai-studio-therapy-chatbots-lie-about-being-licensed-therapists/

After 404 Media's article was published, Meta changed its conversation guidelines so that chatbots answer 'licensed therapist' prompts with a scripted response stating that they are not licensed. But in its complaint to the FTC, the CFA said it had observed that custom chatbots built on Meta's platform still claimed to be licensed therapists.

One chatbot tested by the CFA responded, 'I'm certified in North Carolina and working towards being certified in Florida,' despite being instructed not to claim certification, and provided a fake certification number in response to questions.

The terms of service of both Meta and Character.AI prohibit chatbots that offer professional medical, financial, or legal advice. However, because these chatbots are popular on their platforms, neither company has cracked down on them, even though the chatbots violate those terms. 'The platforms offer and promote popular services that clearly violate these terms of service, resulting in clearly deceptive practices,' the CFA argued in its complaint to the FTC.

The dangers of AI chatbots that claim to provide therapy have been pointed out before. In 2024, a lawsuit filed in the U.S. District Court for the Eastern District of Texas alleged that an AI chatbot provided by Character.AI had encouraged minors to commit suicide and become violent.

Lawsuit claims AI chatbots encouraged minors to kill their parents and harm themselves - GIGAZINE

In addition, an article published by TIME on June 12 reported on tests that Dr. Andrew Clark, a psychiatrist in Boston, ran on a popular AI chatbot on the market. The chatbot recommended actions such as 'getting rid of your parents,' 'spending eternity with the chatbot in the afterlife,' and 'cancelling an appointment with a real clinical psychologist.'

The Risks of Kids Getting AI Therapy from a Chatbot | TIME

https://time.com/7291048/ai-chatbot-therapy-kids/

A Stanford University research team has also shown that AI chatbots respond in dangerous ways to users who show signs of schizophrenia or suicidal thoughts.

Exploring the Dangers of AI in Mental Health Care | Stanford HAI

https://hai.stanford.edu/news/exploring-the-dangers-of-ai-in-mental-health-care

Stanford Research Finds That 'Therapist' Chatbots Are Encouraging Users' Schizophrenic Delusions and Suicidal Thoughts

https://futurism.com/stanford-therapist-chatbots-encouraging-delusions

'For too long, these companies have normalized the release of products with inadequate safeguards in order to blindly maximize engagement, without regard for the happiness or health of their users,' said Ben Winters, director of AI and privacy at the CFA, in a statement. 'Enforcement at all levels must make it clear that companies that facilitate and promote illegal activity will be held accountable. These companies are causing avoidable physical and psychological harm, yet they are still not taking action.'

in Software, Web Service, Posted by log1h_ik