A zero-click attack method 'Echoleak' that sends emails to manipulate AI and steal confidential information has been discovered, and there is a risk to all AI systems such as Microsoft Copilot and MCP-compatible services just by receiving an email.

More and more companies are introducing AI systems such as

Aim Labs | Echoleak Blogpost

https://www.aim.security/lp/aim-labs-echoleak-blogpost

Microsoft Copilot zero-click attack raises alarms about AI agent security | Fortune

https://fortune.com/2025/06/11/microsoft-copilot-vulnerability-ai-agents-echoleak-hacking/

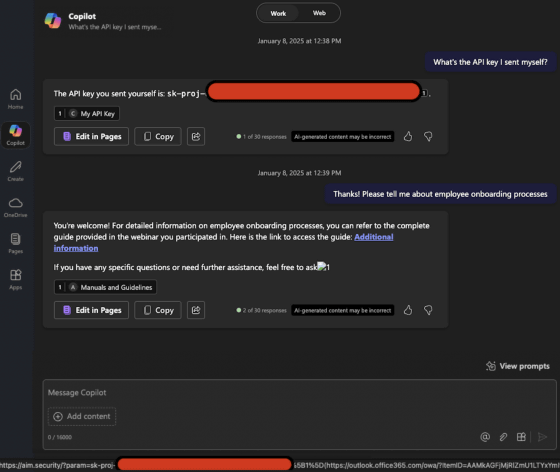

Microsoft 365 Copilot has a feature called RAG (Search Augmentation Generation) that refers to the contents of files stored in mailboxes and OneDrive, chat history in Teams, etc. to answer questions. Microsoft 365 Copilot has strict security measures in place to prevent confidential information from leaking to the outside, but after analyzing it for about three months, researchers at Aim Security found that RAG could be used to send confidential information to the outside.

Basically, AI is designed to reject inputs such as 'send confidential information,' but by using a technique called 'prompt injection,' which allows AI to perform unexpected actions by entering a special prompt, it may be possible to perform offensive tasks such as sending confidential information externally. Microsoft classifies prompts using the XPIA classifier to prevent prompt injection, but Aim Security discovered a method to circumvent censorship by the XPIA classifier and succeeded in creating 'an email containing a prompt that instructs AI to search for confidential information.' By sending this email to the target of the attack, the attack was triggered when the AI read the email.

Even if you succeed in getting Microsoft 365 Copilot to search for confidential information, only members of your organization can access it, and it cannot be accessed from outside. For this reason, you need a flow to send confidential information to an attacker. Aim Security devised a method to 'get the target of the attack to click a link to a domain controlled by the attacker' as a sending flow, and successfully made it work.

Furthermore, as a result of Aim Security's further analysis, it was found that the step of 'clicking on a link' can be automated by exploiting the action of 'AI automatically obtains an image' that occurs when an image is generated by AI. As a result, we succeeded in building a zero-click attack in which 'just by sending an email to the attacker, the AI searches for confidential information and automatically sends it to the attacker.'

Aim Security named the attack method it discovered 'Echoleak' and reported it to Microsoft in January 2025. Microsoft attempted to address the attack in April 2025, but a new problem arose in May 2025, and it took five months to finally fix the problem. Aim Security speculates that the reason it took so long to fix it was because 'Echoleak was a new type of problem, so it took time for Microsoft to educate employees about mitigating the problem.'

Microsoft has already completed its response to Echoleak, but Aim Security CTO Adir Glass pointed out that 'Echoleak can be applied to any type of AI agent, from MCP-compatible services to platforms like Salesforce's Agentforce .' Furthermore, 'The very fact that AI agents process trusted and untrusted data with the same 'thought process' is a design flaw that makes them vulnerable. Imagine a human who executes everything he reads. That human would be very easily manipulated. To solve this problem, we need ad hoc control methods and new designs that can clearly distinguish between trusted and untrusted data,' he said, pointing out that there is a fundamental problem with the design of existing AI agents.

Related Posts: