What if human brains were bigger?

The human brain is considerably larger than those of other living creatures, and

What If We Had Bigger Brains? Imagining Minds beyond Ours - Stephen Wolfram Writings

https://writings.stephenwolfram.com/2025/05/what-if-we-had-bigger-brains-imagining-minds-beyond-ours/

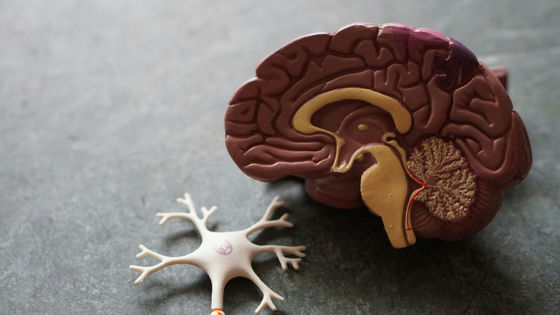

The human brain has about 100 billion neurons, and it is believed that this many neurons are needed to use the kind of structured language that humans use. There are no creatures with more neurons than humans, but AI systems with substantially more "neurons" may well emerge. According to Wolfram, imagining what would happen if the human brain were larger is a useful way to explore both the development of AI and the fundamental nature of computation. Relatedly, Google researchers claim that there is a remarkable similarity between the internal embeddings of large language models and the patterns of neural activity in the human brain when it processes speech.

Google researchers claim that the processing order of large-scale language models is similar to neural activity in the human brain - GIGAZINE

Wolfram first pointed out that 'having a larger brain has an impact on the languages we can use.' Human languages are thought to have about 30,000 commonly used words each, and the fact that this number is roughly consistent across different languages is thought to be due to the size of the human brain.

Our daily lives can be described adequately with those 30,000 or so words. However, such descriptions often rely on general or abstract categories, such as grouping 'tigers' and 'lions' together as 'felines.' If human brains were larger and the vocabulary they could handle grew significantly, we would start dealing with things that our current concepts cannot express. In other words, if AI acquired computing power equivalent to more neurons than humans have, concepts that humans cannot understand could 'emerge' within it.

It is not yet clear to what extent the commonalities between languages are due to a shared history, and to what extent they are due to the peculiarities of human sensory experience of the world or the structure of the brain, but Wolfram says that human languages commonly contain ambiguity. According to Wolfram, this ambiguity is a consequence of the limited number of neurons, so if the brain were larger, words could be treated directly as 'clear concepts,' while the 'grammatical ability' to combine words to convey approximate meanings would become shallower.

Wolfram describes the brain's role as 'translating a huge amount of sensory input into a small number of decisions.' This is the role of

Furthermore, Wolfram said that larger brains could handle 'higher-level abstractions.' Even basic perception involves a degree of abstraction, filtering the world rather than capturing it exactly as it is. Abstraction is the act of summarizing or reducing something, which, Wolfram said, is effectively what lets us 'move forward.' Complex abstractions, however, pose conceptual challenges, and the human brain cannot 'reach the final answer.' A larger brain, by contrast, could 'reach further' in the network of abstractions. Wolfram said, 'Higher-level abstractions should be about things we've never thought about before, about the laws of physics and the truth of the universe.'

In summary, as a brain gets bigger, its computational capability increases, and so does the number of concepts it can distinguish and remember from its input. As a result, in language, where we convert sensations into 'tokens' in order to understand them, the range of concepts we can process would grow, and it might even become possible to think and communicate in a structured, visual form that handles 'raw concepts' without tokenization. Above all, a bigger brain can be expected to bring a clear qualitative improvement in the capacity for abstraction.

Neural networks are basically just performing computation; they don't 'work like a mind.' However, if future neural networks with more computational power than the human brain are asked to perform typical 'brain-like tasks,' humans may not be able to understand the computations, abstractions, and languages those networks use. Wolfram said, 'To fully understand what a mind beyond the human mind can do, we will need to advance our entire intellectual system.'
