Results of 'MLPerf Training v5.0', which measures the training performance of AI infrastructure, have been released: NVIDIA is twice as fast as the previous generation, and AMD surpasses NVIDIA in some tests

New MLCommons MLPerf Training v5.0 Benchmark Results Reflect Rapid Growth and Evolution of the Field of AI - MLCommons

https://mlcommons.org/2025/06/mlperf-training-v5-0-results/

NVIDIA Blackwell Delivers Breakthrough Performance in Latest MLPerf Training Results | NVIDIA Blog

https://blogs.nvidia.com/blog/blackwell-performance-mlperf-training/

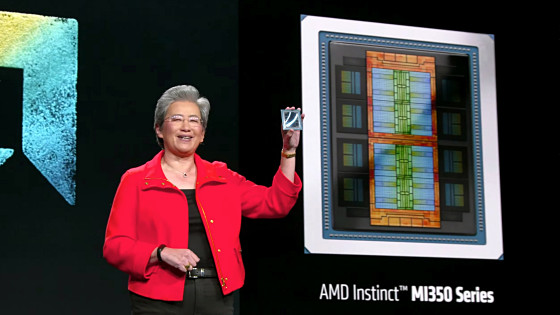

AMD Expands AI Momentum with First MLPerf Training Submission

https://www.amd.com/en/blogs/2025/amd-drives-ai-gains-with-mlperf-training-results.html

AI processing chips are being developed by multiple companies, including NVIDIA, AMD, and Intel, and AI infrastructure equipped with these AI chips is being deployed by vendors such as Dell and Oracle. MLCommons develops benchmark tools that can measure the inference and training performance of AI infrastructure, and collects and publishes benchmark results from chip development companies and vendors. The results announced this time are those of 'MLPerf Training v5.0,' which measures training performance.

MLPerf Training includes several types of tests, such as 'Measurement of the time it takes to train a large language model' and 'Measurement of the time it takes to fine-tune a large language model'. MLCommons updates the tests as AI progresses, and MLPerf Training v5.0 introduces the test 'Measurement of the time it takes to train Llama 3.1 405B' in place of the 'Measurement of the time it takes to train GPT-3' used in the previous version.

The graph below compares the scores of MLPerf Training v4.1 (blue), measured in November 2024, with those of MLPerf Training v5.0 (turquoise). The vertical axis shows the time it takes to train each model, with shorter bars indicating higher performance. The performance of AI infrastructure has improved significantly in half a year, with Stable Diffusion's training speed improving by a factor of 2.28 and Llama 2 70B's training speed improving by a factor of 2.10.

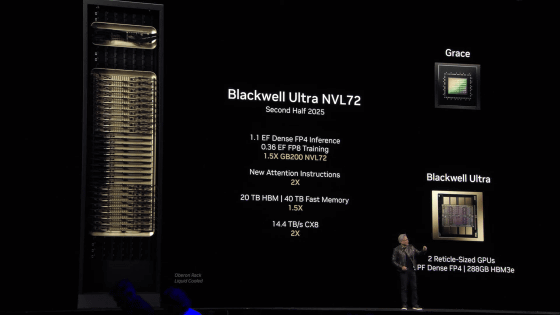

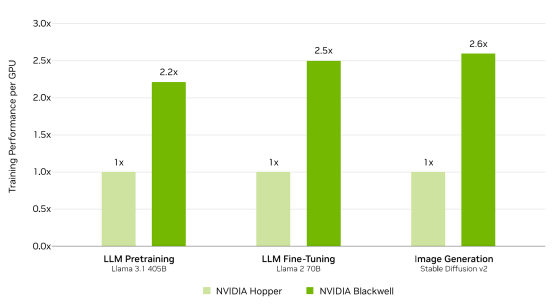
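Because MLPerf Training scores are times to train, an improvement such as the 2.28 times quoted above is a ratio of the older time to the newer one. The following is a minimal sketch of that arithmetic; the function names and the sample times are hypothetical and are not taken from the published results.

```python
def speedup(old_minutes: float, new_minutes: float) -> float:
    """Return how many times faster the new run is (old time / new time)."""
    if old_minutes <= 0 or new_minutes <= 0:
        raise ValueError("training times must be positive")
    return old_minutes / new_minutes


def time_reduction_percent(factor: float) -> float:
    """Convert a speedup factor into the percentage of training time saved."""
    if factor <= 0:
        raise ValueError("speedup factor must be positive")
    return (1.0 - 1.0 / factor) * 100.0


if __name__ == "__main__":
    # Hypothetical times chosen only to illustrate a 2.28x ratio.
    factor = speedup(old_minutes=22.8, new_minutes=10.0)
    print(f"speedup: {factor:.2f}x")
    print(f"time saved: {time_reduction_percent(factor):.1f}%")
```

Read this way, a 2.28 times speedup corresponds to roughly 56% less training time, and 2.10 times to roughly 52%.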

NVIDIA has published a graph comparing the test results of AI infrastructure equipped with Hopper and Blackwell generation AI chips, showing that Blackwell delivers more than twice the performance of Hopper. NVIDIA was also the only MLPerf Training v5.0 submitter to provide test results in every category.

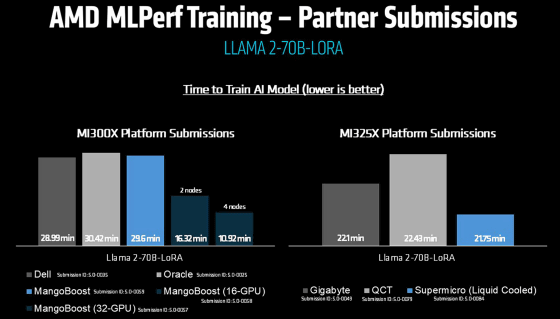

AMD presented results for the test measuring the time it takes to fine-tune Llama 2 70B with LoRA, and claimed that its Instinct MI325X outperformed NVIDIA's hardware in this test. The Instinct MI325X was also up to 30% faster than the Instinct MI300X.

In addition, AMD compared test results submitted by multiple vendors whose systems use AMD's AI chips, emphasizing that the chips deliver consistent performance across vendors.

The results of MLPerf Training v5.0 are available at the following link.

Benchmark MLPerf Training

https://mlcommons.org/benchmarks/training/

in Hardware, Posted by log1o_hf