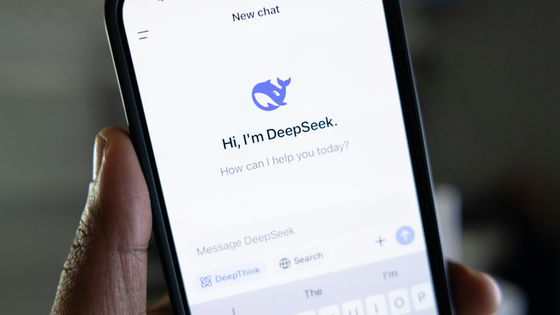

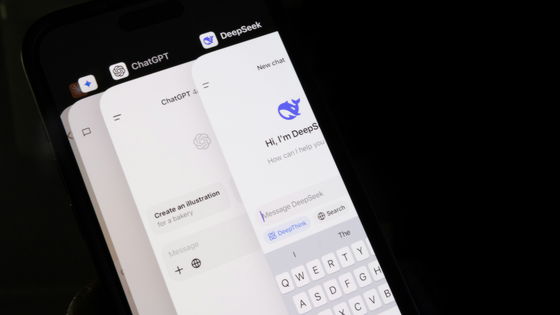

Speculation is rife that the Chinese-made high-performance AI model 'DeepSeek-R1-0528' may have been distilled using Google's AI 'Gemini'

In AI model development, a technique called 'distillation,' in which a large model is used to train a smaller one, is attracting attention. In connection with this technique, it has been suggested that 'DeepSeek-R1-0528,' the AI model announced by the Chinese company DeepSeek in May 2025, was distilled from Google's Gemini. This follows suspicions, raised in January 2025, that DeepSeek had distilled OpenAI's models.
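In its classic form, distillation trains a 'student' model to match the softened output probabilities of a 'teacher' model. The sketch below is a minimal illustration under that assumption, using a temperature-scaled softmax and the KL divergence between the two distributions. It is not a description of how DeepSeek trained its model: the suspicions in this article concern a related variant, fine-tuning on text that another model generated, which requires no access to the teacher's internal probabilities. The function names and numbers are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions,
    scaled by T^2 as in the classic soft-target formulation (an assumption here)."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits for a three-token vocabulary.
teacher = [4.0, 1.0, 0.5]
print(distillation_loss(teacher, [4.0, 1.0, 0.5]))  # student matches teacher: 0.0
print(distillation_loss(teacher, [0.5, 1.0, 4.0]))  # student disagrees: a positive loss
```

Training would push the student's logits to lower this loss, so that the smaller model imitates the larger one's output distribution.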

DeepSeek may have used Google's Gemini to train its latest model | TechCrunch

DeepSeek R1-0528 Controversy: Gemini Influence Examined - Topmost Ads

https://topmostads.com/deepseek-r1-0528-ai-model-controversy/

China's DeepSeek R1-0528 AI Draws Censorship Concerns

https://reclaimthenet.org/new-deepseek-ai-model-faces-criticism-for-heightened-censorship-on-chinese-politics

On May 29, 2025, DeepSeek announced 'DeepSeek-R1-0528,' the latest version of its reasoning model 'DeepSeek-R1,' which shows high performance on mathematics and coding benchmarks.

DeepSeek releases AI model 'DeepSeek-R1-0528' as an open model with performance comparable to o4-mini - GIGAZINE

Sam Paech, a Melbourne-based developer who builds AI evaluations, suggests that the model was trained on Gemini outputs, pointing out that the vocabulary patterns in DeepSeek-R1-0528's output are very similar to those of Gemini 2.5 Pro.
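The article does not say exactly how Paech measured this similarity. As a rough, hypothetical illustration of the general idea, one can count how often each word appears in two models' outputs and compare the resulting frequency profiles with cosine similarity. The function names and sample sentences below are invented for illustration; a real analysis would use far larger samples and more careful statistics.

```python
import math
import re
from collections import Counter

def word_profile(texts):
    """Count lowercase word frequencies across a list of model outputs."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z']+", text.lower()))
    return counts

def cosine_similarity(a, b):
    """Cosine similarity between two word-frequency Counters (0.0 to 1.0)."""
    shared = set(a) & set(b)
    dot = sum(a[w] * b[w] for w in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty profile has no direction to compare
    return dot / (norm_a * norm_b)

# Hypothetical sample outputs, invented for illustration only.
model_a = ["Let's delve into the intricate tapestry of this problem."]
model_b = ["We delve into the intricate tapestry of ideas."]
model_c = ["Here is the answer: add the two numbers."]

profile_a = word_profile(model_a)
print(round(cosine_similarity(profile_a, word_profile(model_b)), 3))  # 0.707
print(round(cosine_similarity(profile_a, word_profile(model_c)), 3))  # 0.211
```

A high score only shows overlapping word choice; it cannot by itself distinguish training on another model's outputs from training on similar data.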

If you're wondering why new deepseek r1 sounds a bit different, I think they probably switched from training on synthetic openai to synthetic gemini outputs. pic.twitter.com/Oex9roapNv

— Sam Paech (@sam_paech) May 29, 2025

In addition, one of the developers of the AI evaluation tool 'SpeechMap' shared findings about the 'traces' produced by DeepSeek-R1-0528. A trace is the intermediate reasoning that some advanced AI models generate on the way to a conclusion, and the developer pointed out that DeepSeek-R1-0528's traces read remarkably like Gemini's.

DeepSeek has previously been accused of using data from rival AI models to train its own.

DeepSeek may have 'distilled' OpenAI data to develop AI, OpenAI says it has 'evidence' - GIGAZINE

However, there is no conclusive evidence in either the earlier case or this one. Similar vocabulary patterns do not rule out the possibility that the models simply share much of the same original training data. In addition, as the technology outlet TechCrunch points out, training data may itself contain low-quality AI-generated content, and this 'contamination' makes it very difficult to thoroughly filter AI outputs out of training datasets.

Apart from the distillation question, DeepSeek-R1-0528 has also been criticized for the same 'censorship' problem as DeepSeek-R1. According to an anonymous developer who goes by xlr8harder, DeepSeek-R1-0528 is significantly more restrictive on sensitive topics than previous versions.

Though apparently this mention of Xianjiang does not indicate that the model is uncensored regarding criticism of China. Indeed, using my old China criticism question set we see the model is also the most censored Deepseek model yet for criticism of the Chinese government. pic.twitter.com/INXij4zhfW

— xlr8harder (@xlr8harder) May 29, 2025

in Software, Posted by log1p_kr