Anthropic releases circuit-tracer, an open source tool that visualizes the thoughts of AI models

Anthropic, an AI company focused on responsible AI development, has released an open-source circuit-tracing tool that traces and graphs the "thoughts" of large language models.

Open-sourcing circuit-tracing tools \ Anthropic

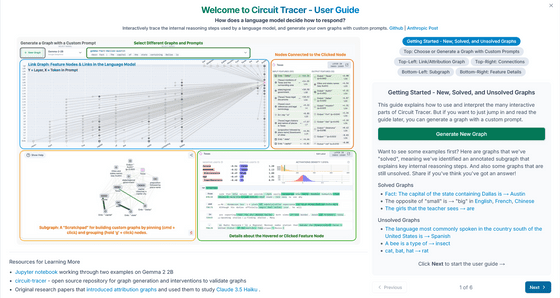

In a post on May 30, 2025, Anthropic's official X (formerly Twitter) account announced that it is open-sourcing the method behind its interpretability team's recent research on tracing the thoughts of a large language model.

Our interpretability team recently released research that traced the thoughts of a large language model.

Now we're open-sourcing the method. Researchers can generate “attribution graphs” like those in our study, and explore them interactively.

Anthropic (@AnthropicAI), May 29, 2025

In research published in March 2025, Anthropic investigated how Claude, the company's AI chatbot, carries on conversations and performs calculations, and how hallucinations arise. The following article covers that research in more detail.

Anthropic explains how information is processed and decisions are made in the mind of AI - GIGAZINE

Building on this research, Anthropic developed a tool that visualizes the thought process of open-weight models as a graph called an 'attribution graph.' The project was led by participants in the Anthropic Fellows program, which conducts AI safety research, in collaboration with Decode Research, which studies AI interpretability.

The circuit-tracer library is open source and available on GitHub.

GitHub - safety-research/circuit-tracer

https://github.com/safety-research/circuit-tracer

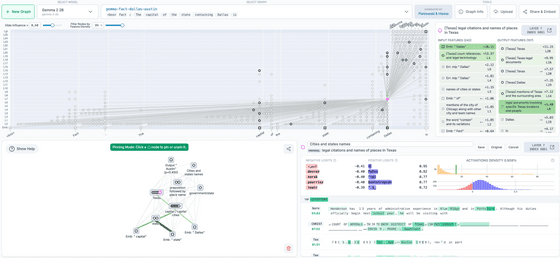

Neuronpedia, an AI model visualization platform run by Decode Research, hosts a front end for exploring attribution graphs interactively.

Neuronpedia's circuit-tracer front end is available at the following link, where you can explore attribution graphs for Google's 'Gemma-2-2B' and Anthropic's 'Haiku'.

gemma-2-2b Attribution Graph | Neuronpedia

With these tools, AI researchers can generate their own attribution graphs to trace the circuits of supported models, then visualize, annotate, and share those graphs in an interactive front end. They can also test hypotheses by changing feature values and observing how the model's output changes.
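To give a rough feel for the idea, here is a minimal toy sketch in Python. It does not use the circuit-tracer library or its API, and every name in it (`W1`, `W2`, `attribution_edges`, `intervene`) is hypothetical. It assumes a tiny, purely linear model, where the contribution of one node to the next is simply its value multiplied by the connecting weight. It builds the edges of a small attribution graph, then overrides one feature value to see how the output changes.

```python
# Toy illustration only: a tiny linear model, NOT the circuit-tracer API.
# Structure: 2 inputs -> 2 intermediate "features" -> 1 output.
W1 = [[1.0, 2.0], [0.5, -1.0]]  # W1[j][i]: weight from input i to feature j
W2 = [3.0, -2.0]                # W2[j]: weight from feature j to the output


def features(x):
    """Compute the intermediate feature values for input x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W1]


def output(h):
    """Compute the model output from the feature values h."""
    return sum(w * hj for w, hj in zip(W2, h))


def attribution_edges(x):
    """Return {(source, target): contribution} for every edge.

    In a linear model, a source node contributes
    (its value) * (connecting weight) to the target node.
    """
    h = features(x)
    edges = {}
    for j, row in enumerate(W1):
        for i, w in enumerate(row):
            edges[(f"input{i}", f"feature{j}")] = w * x[i]
    for j, w in enumerate(W2):
        edges[(f"feature{j}", "output")] = w * h[j]
    return edges


def intervene(x, feature_index, new_value):
    """Recompute the output after overriding one feature value."""
    h = features(x)
    h[feature_index] = new_value
    return output(h)


x = [1.0, 2.0]
baseline = output(features(x))
edges = attribution_edges(x)
ablated = intervene(x, 0, 0.0)  # switch feature0 off

for (src, dst), contribution in edges.items():
    print(f"{src} -> {dst}: {contribution:+.1f}")
print("baseline output:", baseline)
print("output with feature0 set to 0:", ablated)
```

In this toy case, the drop in the output after switching off `feature0` equals the weight of the `feature0 -> output` edge, which is the kind of hypothesis check the passage above describes. Real language models are nonlinear and far larger, so this is only an analogy for how a graph edge and an intervention relate.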

'Currently, our understanding of the inner workings of AI lags far behind the progress of AI capabilities,' said Anthropic. 'By open sourcing these tools, we hope that the broader community will be able to study what is going on inside language models and use these tools to understand the behavior of models. We also hope that extensions will be developed to improve the tools themselves.'

in AI, Web Service, Posted by log1l_ks