DeepSeek releases 'DeepSeek-R1-0528' as an open model with performance comparable to o4-mini

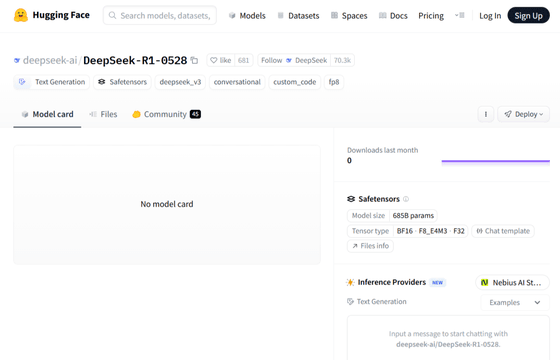

Chinese AI company DeepSeek announced on the Chinese messaging app WeChat that it has released 'DeepSeek-R1-0528,' a minor update to its reasoning AI model DeepSeek R1. The Hugging Face repository contains no description of the model, only the configuration files and the weights, which are the internal parameters that determine the model's behavior.

deepseek-ai/DeepSeek-R1-0528 · Hugging Face

https://huggingface.co/deepseek-ai/DeepSeek-R1-0528

The minor update 'DeepSeek-R1-0528' has 685 billion parameters, making it slightly larger than before. The update mainly improves reasoning capabilities, with highlights including 'deep reasoning like Google's models,' 'improved writing tasks,' 'a distinct reasoning style that is not only fast but also thoughtful,' and 'long thinking sessions of up to 30 to 60 minutes per task.'

R1-0528 feels... aware

— Chetaslua (@chetaslua) May 28, 2025

New DeepSeek R1-0528 Update Highlights:

• now reasons deeply like Google models

• ✍️ Improved writing tasks – more natural, better formatted

• Distinct reasoning style – not just fast, but thoughtful

• ⏱️ Long thinking sessions – up to… pic.twitter.com/mkeQVIWlQk

DeepSeek-R1-0528 has already been ranked on LiveCodeBench, which benchmarks a wide range of coding tasks, including code generation and repair, code execution, and output prediction. On the leaderboard covering problems from August 1, 2024 to May 1, 2025, DeepSeek-R1-0528 ranked fourth at the time of writing, with a score nearly on par with OpenAI's o4-mini (medium).

R1-0528 is vastly more comfortable *being*

— Teortaxes▶️ (DeepSeek Twitter die-hard fan 2023 – ∞) (@teortaxesTex) May 28, 2025

New vs old: https://t.co/ejtIxjo8DB pic.twitter.com/mpjpyFawwH

Below is a video of DeepSeek-R1-0528 reading and summarizing the paper 'Attention Is All You Need,' which introduced the Transformer architecture.

DeepSeek R1 0528 can understand some details in the article, and the answers are more logical, comprehensive and complete!

— Vincent (@AwadbVincent) May 28, 2025

Try freely in Zotero by the plugin PapersGPT( https://t.co/WTk5KA7JVa ) to read papers with the newest DeepSeek model DeepSeek R1 0528. pic.twitter.com/XD2aPbMGXD

DeepSeek-R1-0528 is released under the MIT license, and anyone can download the model weights for free.

Follow-up article:

Speculations are rife that the Chinese-made high-performance AI model 'DeepSeek-R1-0528' may have been distilled using Google's AI 'Gemini' - GIGAZINE
