Anthropic accused of citing an AI-'hallucinated' paper as a source in a court filing; Anthropic's lawyers say it was a 'simple citation error'

Anthropic, the developer of the AI assistant 'Claude,' has been accused of citing a non-existent research paper to bolster its claims in a copyright lawsuit brought against it by major music companies such as Universal Music Group (UMG).

Anthropic expert accused of using AI-fabricated source in copyright case | Reuters

Did Anthropic's own AI generate a 'hallucination' in legal defense against song lyrics copyright case? - Music Business Worldwide

https://www.musicbusinessworldwide.com/did-anthropics-own-ai-generate-a-hallucination-in-legal-defense-against-song-lyrics-copyright-case/

Anthropic's lawyer was forced to apologize after Claude hallucinated a legal citation | TechCrunch

https://techcrunch.com/2025/05/15/anthropics-lawyer-was-forced-to-apologize-after-claude-hallucinated-a-legal-citation/

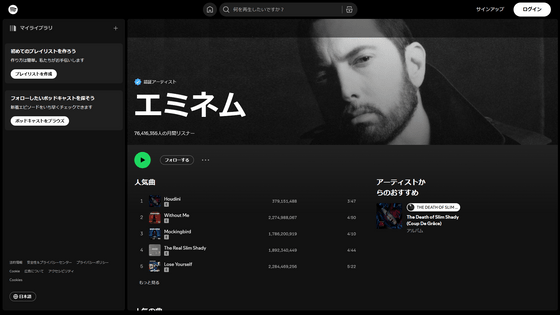

UMG, Concord Music, an independent music company that distributes through UMG, and ABKCO filed an amended complaint on April 25, 2025, alleging that Anthropic used copyrighted lyrics without permission to train its AI, 'Claude.'

In response, Olivia Chen, a data scientist at Anthropic, filed a declaration on April 30 citing a paper said to be published in the academic journal The American Statistician, to justify the sample size used to estimate how frequently users prompt Claude to output copyrighted lyrics.

Anthropic's argument is that it is an extremely rare occurrence for users to request lyrics from Claude.

However, when Matt Oppenheim, the plaintiffs' attorney, contacted the people listed as authors of the paper and The American Statistician, he discovered that no such paper existed.

Oppenheim said he did not believe Chen intended to lie, but that Chen had likely used Claude to help write the document and that the AI 'hallucinated' the citation, Reuters reported.

'Hallucination' refers both to an AI's act of outputting false information and to the false information itself. The verb form, 'hallucinate,' was selected as the Cambridge Dictionary's Word of the Year for 2023.

'Hallucination' selected as 2023 Word of the Year, adding new meaning for generative AI - GIGAZINE

Anthropic's lawyer, Sy Damle, acknowledged that there was a 'citation error' but denied that the AI had fabricated the source. He argued that 'the plaintiffs are delaying their complaint and using us as a punching bag.'

However, Judge van Keulen, who is presiding over the case, disagreed with Damle's argument, saying there is a big difference between a citation oversight and a hallucination generated by an AI.

Separately, in May 2025, a judge in California criticized two law firms for submitting false AI-generated research to the court. In January 2025, a case was also reported in Australia in which a lawyer used AI to draft court documents that turned out to contain 'hallucinations.'


in Note, Posted by logc_nt