Google Announces Agent2Agent, an Interoperability Protocol for Enabling Collaboration Between AI Agents

Many 'AI agents' can now carry out tasks autonomously, such as making a restaurant reservation at a user's request. Google has announced a new protocol, 'Agent2Agent (A2A),' that lets AI agents with completely different designs interoperate. Google positions A2A as a complement to the 'Model Context Protocol (MCP).'

Announcing the Agent2Agent Protocol (A2A) - Google Developers Blog

https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

Home

https://google.github.io/A2A/#/

'AI agents' are tools that can autonomously handle routine or complex tasks, including highly specialized work such as providing customer support for companies or booking hotels. For example, Google offers an AI agent called 'Project Mariner' that can operate Chrome automatically, while Salesforce offers 'Agentforce,' an AI agent for customer management.

Because each company builds its own AI agents, it is difficult to get agents from different vendors to exchange information and work together smoothly. A2A is being introduced to solve this problem.

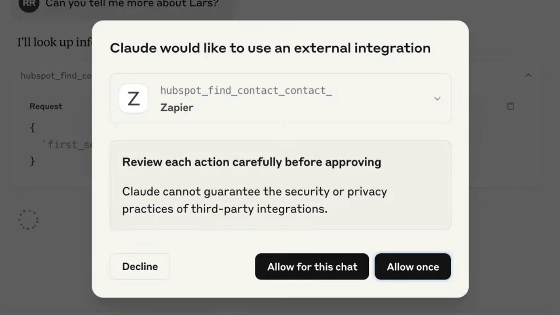

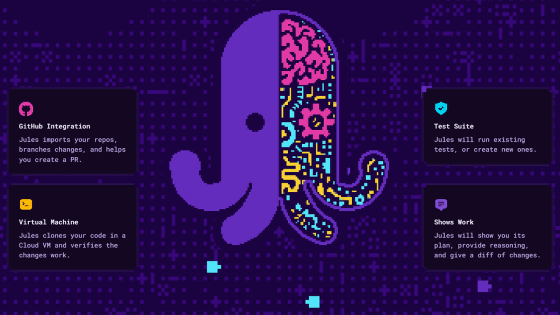

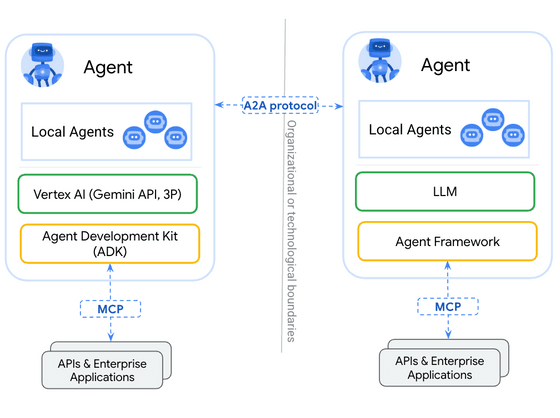

A2A is a protocol for communication between 'client agents' and 'remote agents.' With A2A, the client agent (the AI agent that first receives the user's instructions) can find a remote agent able to carry out those instructions, pass it the necessary information, and relay the remote agent's results back to the user.

Remote agents advertise their capabilities in JSON-formatted 'agent cards.' A card might state, for example, that the agent specializes in talent scouting or interview scheduling. Using this information, the client agent determines which remote agent best fits the user's instructions, and the two agents then exchange messages. Along the way they can 'negotiate' how to handle the task; a remote agent might ask, for instance, 'I can display images and tables. Do you need them?'

Below is a demo video shown by Google.

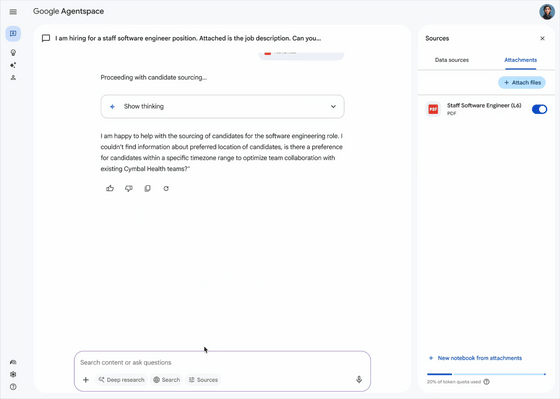

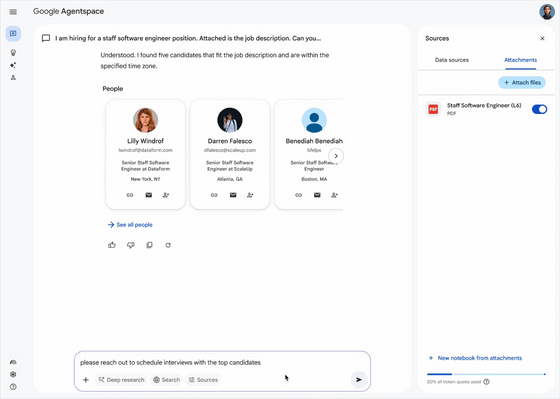

The chat screen shown here is from Google's AI agent, Agentspace. The user attaches a file describing the job and asks the agent to find software engineers who fit the criteria.

Agentspace, acting as the client agent, searches for an AI agent that fits the criteria and determines that one called 'Sourcing Agent' is the best fit.

On receiving the message from Agentspace, the Sourcing Agent replies, 'We're happy to help you,' and asks the user for more information.

Once it has the additional information, the Sourcing Agent reasons: 'I can send this as plain text, or I can display it as a friendly card using an iframe embed. I'll check with the client agent whether it supports iframes. It does, so let's display it as a card.'
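The negotiation in the demo boils down to picking an output format that both agents support. The Python sketch below assumes each side simply lists the modes it can handle, with the remote agent's list ordered by preference; the mode names 'iframe' and 'text' and the functions `negotiate_mode` and `render` are hypothetical and not part of any published A2A API.

```python
import html
from dataclasses import dataclass


@dataclass
class Reply:
    """A remote agent's answer plus the output mode it was rendered in."""
    mode: str
    body: str


def negotiate_mode(remote_modes: list[str], client_modes: list[str]) -> str:
    """Return the remote agent's most preferred mode that the client also supports.

    remote_modes is ordered by preference; the mode names are hypothetical.
    """
    for mode in remote_modes:
        if mode in client_modes:
            return mode
    raise ValueError("no output mode supported by both agents")


def render(candidates: list[str], mode: str) -> Reply:
    """Format the results for the negotiated mode (hypothetical formats)."""
    if mode == "iframe":
        # HTML fragment that a client could show inside an embedded card.
        items = "".join(f"<li>{html.escape(c)}</li>" for c in candidates)
        return Reply(mode, f"<ul>{items}</ul>")
    # Plain-text fallback: one candidate per line.
    return Reply(mode, "\n".join(candidates))


if __name__ == "__main__":
    mode = negotiate_mode(["iframe", "text"], client_modes=["text", "iframe"])
    print(render(["Candidate A", "Candidate B"], mode))
```

Falling back to plain text keeps the exchange working even when the client cannot render embedded content, which mirrors the Sourcing Agent's reasoning in the demo.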

The Sourcing Agent then displayed the information as a card.

The key point is that all of this processing happens inside Agentspace, so users can complete the task smoothly without switching between screens for multiple AI agents.

A2A is positioned as a complement to MCP (Model Context Protocol), which acts as a bridge between AI and external data sources. The two protocols play different roles:

MCP: Connects AI agents to external tools, APIs, and resources

A2A: Connects different AI agents to one another

A2A is backed by more than 50 companies, including Atlassian, Salesforce, and PayPal. It is published as an open protocol and is expected to see wide adoption. The official version is scheduled for release in the second half of 2025.


in Software, Posted by log1p_kr