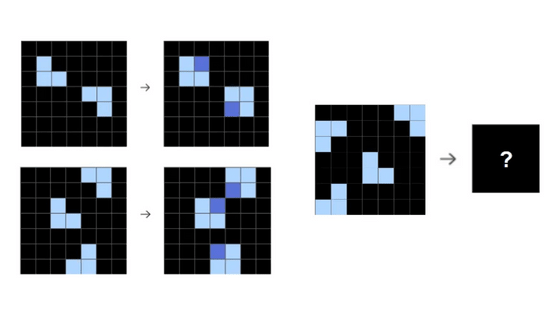

'ARC Prize - Play the Game' lets you play, for free, puzzle tasks that are 'easy for humans but difficult for AI'

'ARC-AGI-2', a new version of the 'ARC (Abstraction and Reasoning Corpus)-AGI' benchmark designed to measure the abstract reasoning ability of AI, has been released. ARC-AGI-2 consists of tasks that are easy for humans but difficult for AI, and tasks actually used in ARC-AGI-2 can be played in a web browser.

ARC Prize - Play the Game

Announcing ARC-AGI-2 and ARC Prize 2025

https://arcprize.org/blog/announcing-arc-agi-2-and-arc-prize-2025

The ARC Prize is a competition held on Kaggle, one of the world's largest online community platforms for data science and artificial intelligence. Participants must develop efficient and effective solutions within a limit of approximately $50 (approximately 7,500 yen) worth of computing resources. The first team to score 85% or higher will receive the grand prize of $700,000 (approximately 105 million yen), and the team with the highest score will receive the top score prize of $75,000 (approximately 11.3 million yen).

In total, the ARC Prize will award $1 million to researchers working toward artificial general intelligence (AGI) with human-level intelligence.

The tasks are publicly available, so you can try solving them yourself to see what they are like. Go to the play page and click 'Start'.

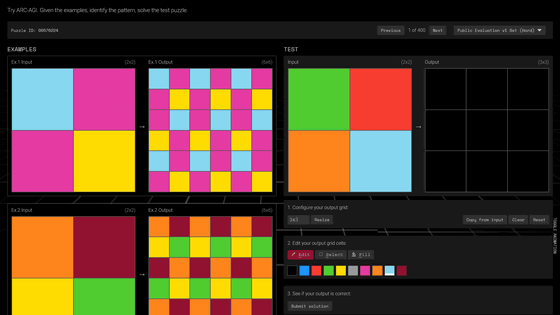

The task displayed changes daily. Study the input and output pairs under 'EXAMPLES' on the left, then enter the appropriate output under 'TEST' on the right.

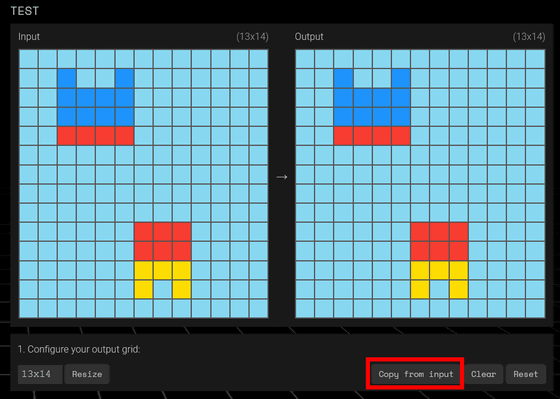
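The idea of inferring a rule from 'EXAMPLES' and applying it to 'TEST' can be sketched in a few lines of Python. This is a minimal sketch, assuming the JSON layout used by the publicly distributed ARC task files: `train` and `test` lists of pairs, each with an `input` and `output` grid of integers. The toy task and the `mirror_rows` rule are made up for illustration and are not taken from ARC-AGI-2.

```python
import json

# A made-up toy task (NOT from ARC-AGI-2) in the assumed JSON layout:
# "train" holds the example pairs, "test" holds the pair to be answered.
TOY_TASK_JSON = """
{
  "train": [
    {"input": [[1, 0], [2, 0]], "output": [[0, 1], [0, 2]]},
    {"input": [[3, 3, 0]], "output": [[0, 3, 3]]}
  ],
  "test": [
    {"input": [[0, 5], [4, 0]], "output": [[5, 0], [0, 4]]}
  ]
}
"""


def mirror_rows(grid):
    """Hypothetical candidate rule: flip every row left to right."""
    return [list(reversed(row)) for row in grid]


def rule_fits_examples(rule, task):
    """Return True if the rule reproduces every example output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


task = json.loads(TOY_TASK_JSON)

# Step 1: check the candidate rule against the examples.
fits = rule_fits_examples(mirror_rows, task)

# Step 2: apply it to the test input and compare with the expected grid.
prediction = mirror_rows(task["test"][0]["input"])
is_correct = prediction == task["test"][0]["output"]

print("rule fits examples:", fits)
print("prediction:", prediction)
print("correct:", is_correct)
```

Real tasks are much harder than this toy case because the rule has to be discovered rather than supplied, which is exactly the part that is easy for humans and difficult for AI.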

First, enter the grid size and click 'Resize'.

By clicking 'Copy from Input', you can copy the contents of Input directly to Output.

Once you have answered, click 'Submit solution.'

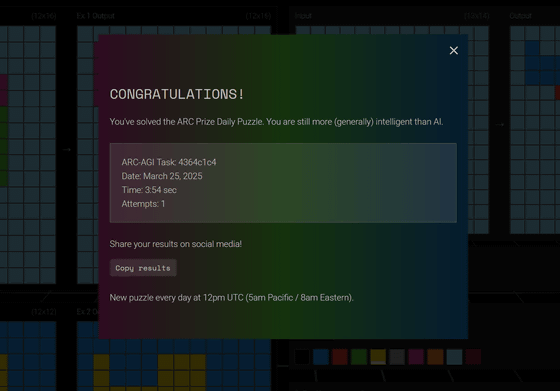

The answer was correct.

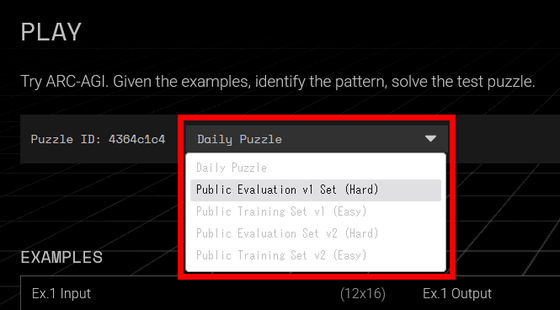

You can also try other tasks by choosing one from the pull-down menu next to 'Puzzle ID'. The tasks are grouped by difficulty level.

ARC-AGI-2 consists of 120 public evaluation tasks, 120 semi-private evaluation tasks, 120 private evaluation tasks, and 1,000 training tasks. What ARC-AGI-2 measures is not memorization or the application of existing knowledge but 'fluid intelligence', the ability to adapt to new situations. The tasks require interpreting the meaning of symbols, applying multiple rules simultaneously, and applying rules appropriately according to context. Every task has been confirmed to be solvable by at least two humans within two attempts, yet pure large language models score 0%, and even the most advanced reasoning AI systems score only a few percent.
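To make the scoring concrete, here is a minimal sketch of how a percentage score could be computed. It assumes a task counts as solved only if one of at most two attempted output grids matches the expected grid exactly; the helper names `task_solved` and `score_percent` and the toy results are hypothetical. Only the 85% grand prize threshold comes from the article.

```python
GRAND_PRIZE_THRESHOLD = 85.0  # percent, as stated in the article


def task_solved(attempts, expected, max_attempts=2):
    """Assumed rule: solved if any of the first `max_attempts` grids matches exactly."""
    return any(attempt == expected for attempt in attempts[:max_attempts])


def score_percent(results):
    """results: list of (attempts, expected_grid) tuples, one per task."""
    if not results:
        return 0.0
    solved = sum(task_solved(attempts, expected) for attempts, expected in results)
    return 100.0 * solved / len(results)


# Made-up results for four toy tasks with 1x1 grids.
toy_results = [
    ([[[1]], [[2]]], [[2]]),          # solved on the second attempt
    ([[[0]]], [[1]]),                 # never solved
    ([[[3]]], [[3]]),                 # solved on the first attempt
    ([[[0]], [[1]], [[4]]], [[4]]),   # correct only on a third attempt: not counted
]

score = score_percent(toy_results)
print(f"score: {score:.1f}%")
print("meets grand prize threshold:", score >= GRAND_PRIZE_THRESHOLD)
```

Exact matching leaves no partial credit: a grid with a single wrong cell counts the same as a completely wrong answer.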

ARC-AGI-2 is also distinctive in that it looks not only at whether a task can be solved but also at how efficiently it is solved. The ARC Prize considers AGI to have been reached 'when the gap between problems that are easy for humans but difficult for AI becomes zero', that is, when no such problems remain. For that reason, the cost of solving each task is also measured and compared with the human cost of about $17 (about 2,500 yen) per task, a figure obtained from experiments.
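The cost comparison is simple arithmetic, sketched below. The $17 human figure and the roughly $50 compute limit come from the article; spreading that $50 evenly over 120 evaluation tasks is an assumption made here purely for illustration, and the function names are hypothetical.

```python
HUMAN_COST_PER_TASK_USD = 17.0  # human cost per task, from the article
COMPUTE_BUDGET_USD = 50.0       # approximate competition compute limit, from the article
NUM_TASKS = 120                 # ASSUMPTION: budget spread evenly over 120 tasks


def cost_per_task(total_cost_usd, num_tasks):
    """Average cost of attempting one task."""
    if num_tasks <= 0:
        raise ValueError("num_tasks must be positive")
    return total_cost_usd / num_tasks


def relative_to_human(cost_usd):
    """Cost per task as a fraction of the human cost per task."""
    return cost_usd / HUMAN_COST_PER_TASK_USD


budget_cost = cost_per_task(COMPUTE_BUDGET_USD, NUM_TASKS)
ratio = relative_to_human(budget_cost)

print(f"cost per task under the budget: ${budget_cost:.2f}")
print(f"fraction of human cost: {ratio:.3f}")
```

Under these assumptions a system would have to work at well under a dollar per task, a small fraction of the human figure, which is why efficiency is reported alongside the score.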

in Review, Software, Web Application, Posted by log1i_yk