North Korean hackers found to have tried using OpenAI's AI to 'create attack code' and 'create fraudulent emails'

OpenAI published a report on malicious uses of its AI on February 21, 2025. According to the report, North Korean cybercrime groups used OpenAI's AI to ask how to write malware and to create social media posts that misrepresented their backgrounds.

Disrupting malicious uses of AI | OpenAI

OpenAI bans ChatGPT accounts used by North Korean hackers

https://www.bleepingcomputer.com/news/security/openai-bans-chatgpt-accounts-used-by-north-korean-hackers/

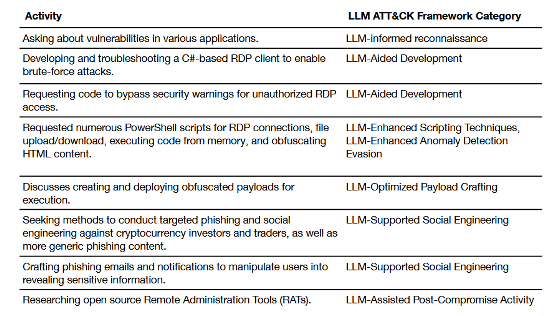

OpenAI has discovered and suspended several accounts believed to be linked to North Korean threat actors. The accounts have been tied to the North Korean cybercrime groups Velvet Chollima (aka Kimsuky, Emerald Sleet, etc.) and Stardust Chollima (aka APT38, Sapphire Sleet, etc.).

The malicious activities that North Korean cybercrime groups carried out using OpenAI's AI tools include 'generating code to circumvent the security features of remote desktop clients,' 'searching for ways to attack cryptocurrency investors,' and 'creating phishing emails designed to extract confidential information.'

While analyzing the behavior of the cybercrime groups, OpenAI discovered 'staging URLs for unknown attack binaries.' OpenAI submitted the discovered URLs to security scanning services, and many security software products can now detect the binaries.

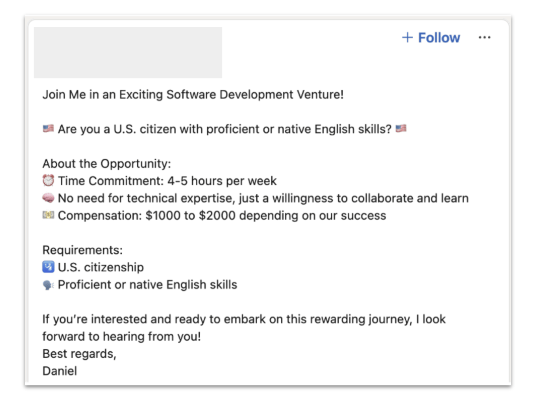

OpenAI also discovered that North Korean users were creating job-hunting social media posts while posing as 'job-seeking users from other countries.' Below is an example of a social media post thought to have been created by a North Korean user with OpenAI's AI. These posts are thought to be part of North Korea's efforts to earn foreign currency.

The full OpenAI report can be found at the following link:

Disrupting malicious uses of our models: an update February 2025 (PDF file)

https://cdn.openai.com/threat-intelligence-reports/disrupting-malicious-uses-of-our-models-february-2025-update.pdf
