Meta announces technology that uses AI and non-invasive magnetic scanners to predict typed text from brainwaves with up to 80% accuracy

Meta's Fundamental Artificial Intelligence Research Lab (FAIR), which is focused on enabling

Using AI to decode language from the brain and advance our understanding of human communication

https://ai.meta.com/blog/brain-ai-research-human-communication/

Meta develops 'hat' for typing text by thinking — uses AI to read brain signals for keypresses | Tom's Hardware

https://www.tomshardware.com/tech-industry/artificial-intelligence/meta-develops-hat-for-typing-text-by-thinking-uses-ai-to-read-brain-signals-for-keypresses

Meta's Brain2Qwerty AI Model Turns Brain Signals Into Text as A Non-Invasive Alternative to Musk's Neuralink - WinBuzzer

https://winbuzzer.com/2025/02/09/metas-brain2qwerty-ai-model-turns-brain-signals-into-text-as-a-non-invasive-alternative-to-musks-neuralink-xcxwbn/

Building on previous research into decoding image and speech perception from brain activity, Meta has announced a study that 'decodes sentence generation from non-invasively collected brainwaves. In this study, they were able to accurately decode up to 80% of characters and reconstruct complete sentences from brain signals alone.'

Another study published by Meta details how AI can help understand brain signals, explaining 'how the brain effectively translates thoughts into sequences of words.'

Every year,

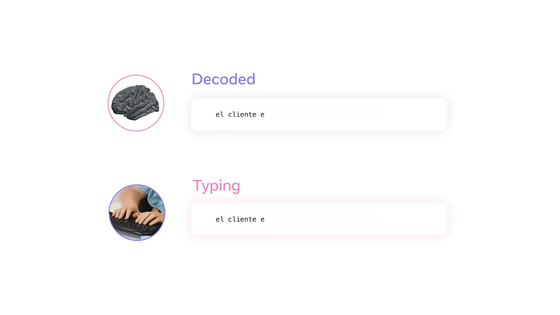

In the first study published by Meta, the researchers used magnetoencephalography (MEG) and electroencephalography (EEG), non-invasive devices that measure the magnetic and electric fields produced by neural activity, to record the brain activity of 35 healthy volunteers as they typed sentences. They then trained a new AI model to reconstruct the sentences from the brain signals alone.

The AI model was able to decode up to 80% of the characters participants typed from MEG recordings of their brainwaves, which is at least twice as good as EEG-based predictions.
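The article does not say exactly how "80% of characters" was scored. A common way to score decoded text is the character error rate (CER): the edit distance between the decoded and reference text, divided by the length of the reference. The sketch below assumes "accuracy" means 1 − CER; the function names are hypothetical, and this is not Meta's evaluation code.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def character_accuracy(reference: str, decoded: str) -> float:
    """Hypothetical metric: 1 - character error rate (CER), floored at 0."""
    if not reference:
        raise ValueError("reference must be non-empty")
    cer = levenshtein(reference, decoded) / len(reference)
    return max(0.0, 1.0 - cer)


if __name__ == "__main__":
    # One deleted letter ("brwn") and one wrong letter ("fix") in a 19-character sentence.
    print(character_accuracy("the quick brown fox", "the quick brwn fix"))
```

Here two character errors in a 19-character sentence give an accuracy of about 0.89. Under this assumed metric, "up to 80%" would correspond to a CER of about 20% for the best-decoded participants.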

This research could pave the way for non-invasive brain-computer interfaces that help restore communication in people who have lost the ability to speak. However, several important challenges remain before the approach can be applied in clinical practice. First, 'decoding performance is still imperfect.' Second, 'MEG requires subjects to stay still in a magnetically shielded room,' which makes it difficult to use. Third, 'further research is needed to explore how it actually benefits people suffering from brain damage.'

The second study, on how the brain converts thoughts into sequences of words, had to deal with a simple technical problem: moving the mouth and tongue severely corrupts neuroimaging signals. To explore how the brain turns thoughts into a complex series of motor actions without that interference, the team used AI to decode MEG signals recorded while subjects were typing. By taking 1,000 brain snapshots per second, they were able to pinpoint the exact moment when thoughts were converted into words, syllables, and even individual letters.
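At 1,000 snapshots per second, each sample covers 1 millisecond, so a keypress can be located in the recording to within about a millisecond. A typical first step in this kind of analysis is to cut a fixed window (an "epoch") of signal around each keypress. The sketch below assumes a recording stored as a channels × samples NumPy array, keypress times in seconds, and an illustrative window from 200 ms before to 500 ms after each key; the function name, window bounds, and channel count are hypothetical and not taken from Meta's pipeline. Libraries such as MNE-Python offer a full-featured version of this step through `mne.Epochs`.

```python
import numpy as np

SAMPLING_RATE_HZ = 1000  # 1,000 snapshots per second -> one sample every 1 ms


def epoch_around_keypresses(signal, keypress_times_s, tmin_s=-0.2, tmax_s=0.5,
                            sfreq=SAMPLING_RATE_HZ):
    """Cut fixed-length windows (epochs) of signal around each keypress.

    signal: array of shape (n_channels, n_samples)
    keypress_times_s: keypress onsets in seconds from the start of the recording
    Returns an array of shape (n_epochs, n_channels, n_window_samples).
    Keypresses whose window would run past either end of the recording are skipped.
    """
    start_offset = int(round(tmin_s * sfreq))  # e.g. -200 samples
    stop_offset = int(round(tmax_s * sfreq))   # e.g. +500 samples
    window = stop_offset - start_offset
    epochs = []
    for t in keypress_times_s:
        center = int(round(t * sfreq))  # convert seconds to a sample index
        start, stop = center + start_offset, center + stop_offset
        if start < 0 or stop > signal.shape[1]:
            continue
        epochs.append(signal[:, start:stop])
    if not epochs:
        return np.empty((0, signal.shape[0], window))
    return np.stack(epochs)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    meg = rng.standard_normal((4, 5000))  # 4 hypothetical channels, 5 seconds of data
    keys = [0.1, 1.0, 2.5, 4.8]           # first and last windows fall off the edges
    print(epoch_around_keypresses(meg, keys).shape)
```

With a 5-second recording, only the keypresses at 1.0 s and 2.5 s have a full window, so the result holds two 700-sample epochs per channel. A decoder would then be trained to map each epoch to the key that was pressed.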

'Importantly, this work also sheds light on how the brain coherently and simultaneously represents successive words and actions,' Meta writes. Deciphering the neural code of language is one of the great challenges of AI and neuroscience. Because language is the uniquely human ability that lets us reason, learn, and accumulate knowledge in a way no other animal on Earth can, 'understanding the neural architecture and computational principles is a key path to developing AMI,' Meta writes, referring to advanced machine intelligence.

Meta wrote that these studies 'would not have been possible without the close collaboration we have fostered in the neuroscience community.' In addition, Meta announced that it would donate $2.2 million (about 330 million yen) to the Rothschild Foundation Hospital to support further research.
