ByteDance's AI 'OmniHuman-1' has been released, which can create realistic deep fake videos at a level that is unbelievable

China's ByteDance has announced ' OmniHuman-1 ,' an AI system that can output realistic videos of people speaking, singing, and moving naturally from a single photo.

omnihuman-lab.github.io/

OmniHuman: ByteDance's new AI creates realistic videos from a single photo | VentureBeat

https://venturebeat.com/ai/omnihuman-bytedances-new-ai-creates-realistic-videos-from-a-single-photo/

Deepfake videos are getting shockingly good | TechCrunch

https://techcrunch.com/2025/02/04/deepfake-videos-are-getting-shockingly-good/

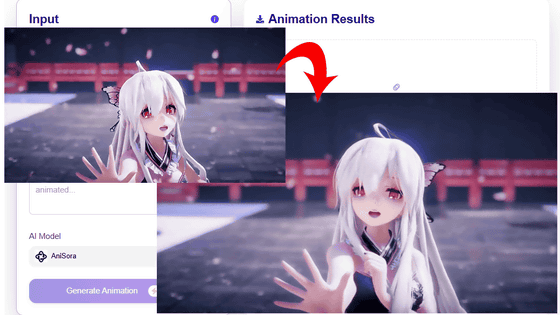

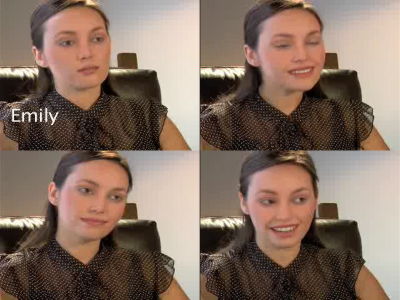

ByteDance announced OmniHuman, an end-to-end multimodal conditional human video generation framework, on February 3, 2025. This model can generate a person's video from a single image of a human and motion signals, such as audio only, video only, or a combination of both.

In benchmarks, it outperformed previous models in areas such as quality and realism.

According to the announcement, OmniHuman uses a combined approach of multiple inputs, including text, voice, and body movements, and is trained on more than 18,700 hours of video data of people.

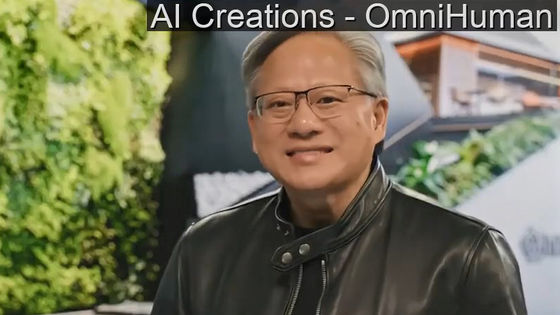

Below is an example of a video generated by OmniHuman. NVIDIA CEO Jensen Huang, whose trademark is his leather jacket, sings a light-hearted rap.

OmniHuman-1 AI Video - sing1 - YouTube

And here's a fictional Taylor Swift performance, showing that it's possible to generate videos of any aspect ratio.

OmniHuman-1 AI Video - sing8 - YouTube

Furthermore, unlike previous models which often only allowed movement of the face or upper body, this model allows the entire body to move.

ByteDance OmniHuman-1 - YouTube

From a famous black and white photo of Einstein, a video was generated that looks as if he were actually giving a lecture, which is very realistic, except for the chalk he is holding, which becomes a fuzzy object.

ByteDance OmniHuman-1 sample - YouTube

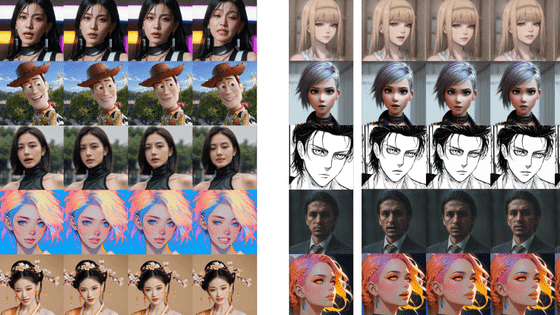

It can also generate videos with tones similar to illustrations, anime, and 3DCG.

— Rohan Paul (@rohanpaul_ai) February 4, 2025

OmniHuman's mixed-conditional training approach allows it to drive video based on audio, mimic other video footage, or combine both. Check out the comparison video below to see how it works:

Video sample generated by OmniHuman-1 - YouTube

While such advances in AI models are expected to bring innovation to video content, there are also concerns that the impact of deep fake videos for inflammatory and fraudulent purposes will intensify. For example, in the 2024 Taiwan election, it has been reported that groups affiliated with the Chinese Communist Party spread AI-generated content that supported pro-China candidates (PDF file) .

VentureBeat, an IT news site, said about OmniHuman, 'Industry experts say the technology could transform entertainment production, educational content creation and digital communications. But doubts have also been raised about the potential for misuse to create synthetic media for deceptive purposes.'

Related Posts: