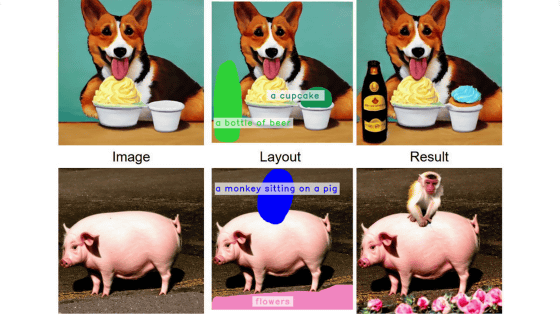

'TokenVerse' uses AI to combine different elements extracted from multiple images to generate a single image

A research team at Google DeepMind has announced a method called ' TokenVerse' that extracts specific visual elements and attributes from an image and uses AI to combine elements extracted from multiple images to generate a natural image. The research team explains on their website what kind of images can be generated using TokenVerse.

[2501.12224] TokenVerse: Versatile Multi-concept Personalization in Token Modulation Space

TokenVerse

https://token-verse.github.io/

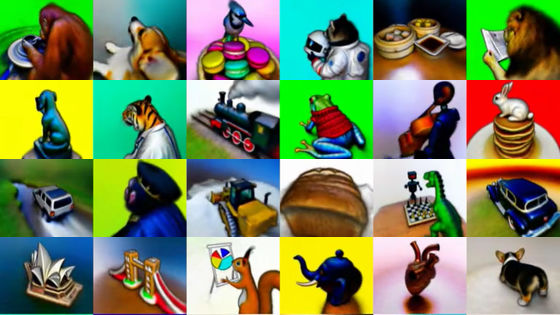

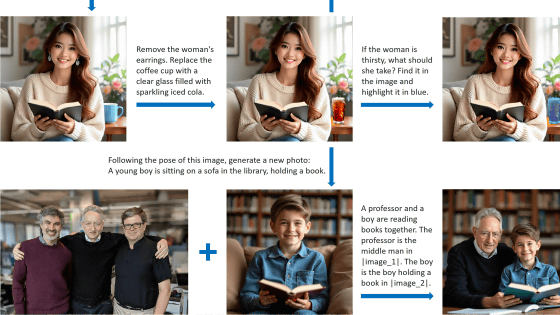

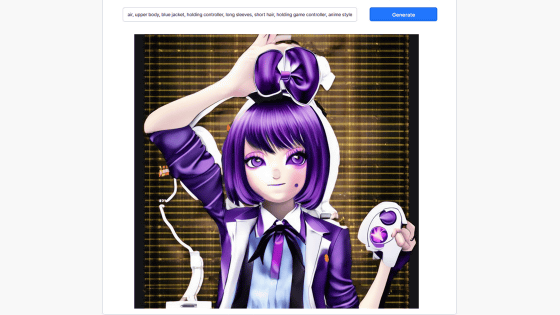

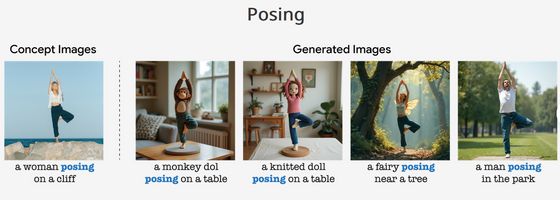

TokenVerse uses a model that generates images from text based on the Diffusion Transformer (DiT), which combines a diffusion model and a transformer, and extracts elements such as objects, accessories, poses, and lighting from images according to the input text. It can then combine the elements extracted from each image to generate a new image.

The research team explains the process of generating images using TokenVerse. For example, the following three images have captions that emphasize elements such as 'dog,' 'glasses,' and 'pattern.'

When these images and the text 'a dog wearing a shirt with a pattern and glasses' were input, the elements 'dog', 'glasses', and 'pattern' were extracted from the images and combined to generate a single image.

Elements that can be extracted include not only the subject and small items, but also the way light hits them.

It seems possible to extract special effects such as fog covering the screen.

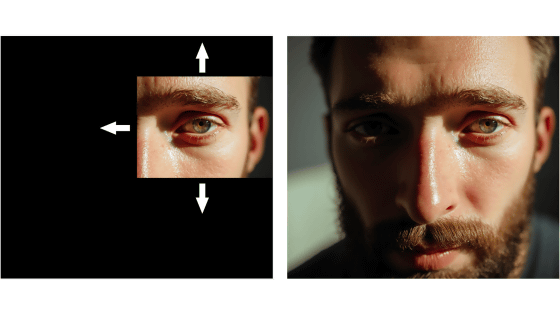

You can also extract only the pose and have the subject extracted from another image pose in the specified way.

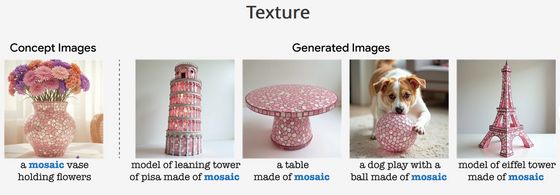

It is also possible to extract only the texture of the object's surface.

The website explaining TokenVerse also provided a demo where you could change the image from which elements are extracted and see how the image generated actually changes.

Related Posts:

in Software, Web Service, Posted by log1h_ik