Cerebras announces the world's fastest AI chip 'WSE-3' equipped with 4 trillion transistors

Cerebras Systems Unveils World's Fastest AI Chip with Whopping 4 Trillion Transistors - Cerebras

https://www.cerebras.net/press-release/cerebras-announces-third-generation-wafer-scale-engine

Cerebras Selects Qualcomm to Deliver Unprecedented Performance in AI Inference - Cerebras

https://www.cerebras.net/press-release/cerebras-qualcomm-announce-10x-inference-performance

Cerebras and G42 Break Ground on Condor Galaxy 3, an 8 exaFLOPs AI Supercomputer - Cerebras

https://www.cerebras.net/press-release/cerebras-g42-announce-condor-galaxy-3

AI startup Cerebras unveils the WSE-3, the largest chip yet for generative AI | ZDNET

https://www.zdnet.com/article/ai-startup-cerebras-unveils-the-largest-chip-yet-for-generative-ai/

Cerebras WSE-3: Third Generation Superchip for AI - IEEE Spectrum

https://spectrum.ieee.org/cerebras-chip-cs3?share_id=8148767

Cerebras Systems Sets New Benchmark in AI Innovation with Launch of the Fastest AI Chip Ever - Unite.AI

https://www.unite.ai/cerebras-systems-sets-new-benchmark-in-ai-innovation-with-launch-of-the-fastest-ai-chip-ever/

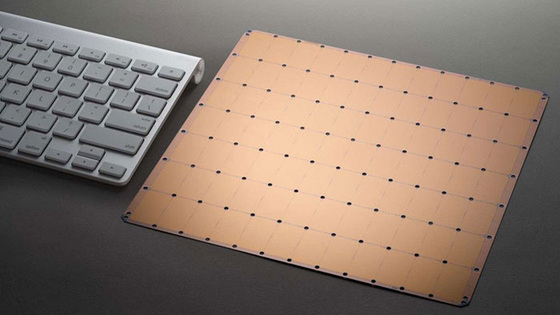

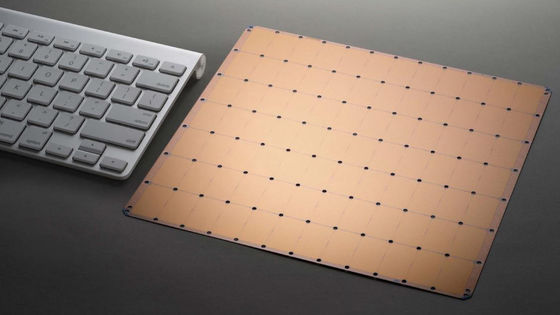

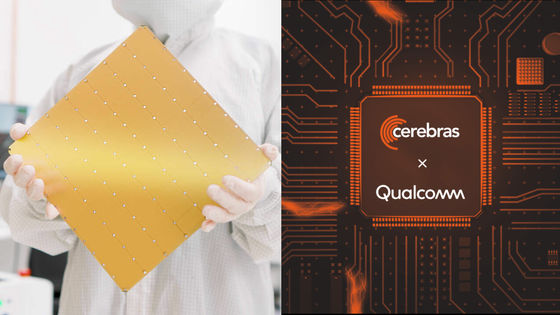

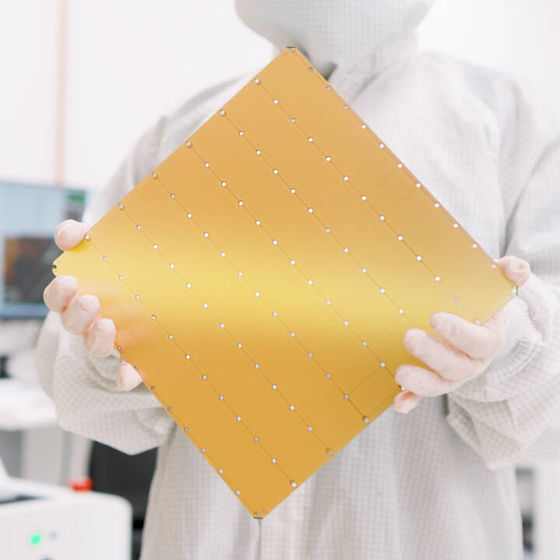

On March 13, 2024 (local time), Cerebras, a developer of components for generative AI, announced the WSE-3. According to the company, the new chip delivers twice the performance of its predecessor, the WSE-2, which had been the fastest AI chip available, at the same power consumption and the same price.

The main specifications of the WSE-3 are as follows:

Number of transistors: 4 trillion

Number of AI cores: 900,000

Peak AI performance: 125 petaflops

On-chip SRAM: 44GB

Memory bandwidth: 21PB/s

Network fabric bandwidth: 214Pbit/s

Process node: TSMC 5nm

External memory: 1.5TB, 12TB, or 1.2PB

Trainable AI model size: up to 24 trillion parameters

Cluster size: Up to 2048 CS-3 systems (computing systems equipped with WSE-3)
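
To put the list above in perspective, the quoted totals can be divided down to per-core figures and multiplied up to the maximum cluster size. The following is a minimal sketch, assuming decimal units (1GB = 10^9 bytes) and assuming that peak performance scales linearly with the number of systems; neither assumption is stated in the announcement, and the variable names are illustrative.

```python
# Figures taken from the specification list above.
AI_CORES = 900_000
PEAK_FLOPS = 125e15          # 125 petaflops per WSE-3
SRAM_BYTES = 44e9            # 44GB, assuming decimal gigabytes
MAX_CLUSTER_SYSTEMS = 2048   # maximum number of CS-3 systems in one cluster

# Per-core shares of the on-chip totals.
sram_per_core_kb = SRAM_BYTES / AI_CORES / 1e3
flops_per_core_g = PEAK_FLOPS / AI_CORES / 1e9

# Aggregate peak of a maximum-size cluster, assuming linear scaling.
max_cluster_exaflops = MAX_CLUSTER_SYSTEMS * PEAK_FLOPS / 1e18

print(f"SRAM per core:       about {sram_per_core_kb:.1f} KB")
print(f"Peak per core:       about {flops_per_core_g:.1f} gigaflops")
print(f"2048-system cluster: about {max_cluster_exaflops:.0f} exaflops peak")
```

Under these assumptions each core has a little under 49KB of SRAM, and a maximum-size cluster would peak at roughly 256 exaflops.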

The WSE-3 is a square with sides measuring 21.5 cm, making it the world's largest single chip.

With a memory system of up to 1.2PB, the CS-3 is designed to train next-generation frontier models that are 10x larger than GPT-4 and Gemini. Models with up to 24 trillion parameters can be stored in a single logical memory space without partitioning or refactoring, which Cerebras says greatly simplifies training workflows and improves developer productivity.
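
A rough footprint estimate shows why 1.2PB is enough for a model of that size. This is a sketch under stated assumptions, not Cerebras's own accounting: 2 bytes per parameter corresponds to 16-bit weights alone, and 16 bytes per parameter is a common rule of thumb for mixed-precision training with an Adam-style optimizer (weights, gradients, and optimizer state). Both byte counts are assumptions that do not come from the announcement.

```python
PARAMS = 24e12                 # 24 trillion parameters (from the article)
MAX_EXTERNAL_MEMORY_TB = 1200  # 1.2PB expressed in decimal terabytes

# Assumed bytes per parameter; these are rules of thumb, not Cerebras figures.
BYTES_PER_PARAM = {
    "16-bit weights only": 2,
    "mixed-precision Adam training state": 16,
}

def footprint_tb(params: float, bytes_per_param: int) -> float:
    """Return the memory footprint in decimal terabytes."""
    return params * bytes_per_param / 1e12

for label, nbytes in BYTES_PER_PARAM.items():
    tb = footprint_tb(PARAMS, nbytes)
    share = tb / MAX_EXTERNAL_MEMORY_TB
    print(f"{label}: {tb:.0f} TB ({share:.0%} of 1.2PB)")
```

Under these assumptions the weights alone take about 48TB and the full training state about 384TB, so both fit in the largest external memory configuration.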

As a result, Cerebras says that training a trillion-parameter model on the CS-3 will be as easy as training a billion-parameter model on a GPU. The company also claims that a full-scale CS-3 cluster can train Llama 70B, Meta's large language model with 70 billion parameters, from scratch in a single day.
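
The single-day claim can be sanity-checked with a back-of-the-envelope estimate. The sketch below rests on several assumptions that are not in the announcement: the widely used approximation of about 6 floating-point operations per parameter per training token, a training set of 2 trillion tokens (the figure Meta reported for Llama 2), a "full-scale" cluster of 2048 CS-3 systems, and the quoted 125 petaflops peak being applicable to this workload. It is a consistency check, not a reproduction of Cerebras's measurement.

```python
PARAMS = 70e9                    # Llama 70B parameter count (from the article)
TOKENS = 2e12                    # assumption: 2 trillion training tokens
SYSTEMS = 2048                   # assumption: maximum cluster size
PEAK_FLOPS_PER_SYSTEM = 125e15   # 125 petaflops per CS-3 (from the article)
SECONDS_PER_DAY = 24 * 60 * 60

# Approximate total training compute: 6 * parameters * tokens.
training_flops = 6 * PARAMS * TOKENS

# Time needed if the whole cluster ran at its quoted peak.
cluster_peak_flops = SYSTEMS * PEAK_FLOPS_PER_SYSTEM
seconds_at_peak = training_flops / cluster_peak_flops

# Fraction of peak the cluster must sustain to finish within one day.
required_utilization = seconds_at_peak / SECONDS_PER_DAY

print(f"Training compute: {training_flops:.2e} FLOPs")
print(f"Time at peak:     {seconds_at_peak / 60:.0f} minutes")
print(f"Utilization needed for a one-day run: {required_utilization:.1%}")
```

Under these assumptions the run would take under an hour at peak, so finishing within a day requires sustaining only a few percent of the quoted peak.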

According to Cerebras, the real bottleneck in AI deployment is inference, that is, running trained neural network models. Cerebras estimates that if every person on the planet were to use OpenAI's ChatGPT, it would cost $1 trillion (approximately 150 trillion yen) per year and consume a staggering amount of energy.
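
The estimate is easier to grasp per person. The sketch below assumes a world population of about 8 billion, which is not stated in the article, and derives the exchange rate implied by the dollar and yen figures quoted above.

```python
ANNUAL_COST_USD = 1e12     # $1 trillion per year (from the article)
ANNUAL_COST_JPY = 150e12   # approximately 150 trillion yen (from the article)
WORLD_POPULATION = 8e9     # assumption: about 8 billion people

# Exchange rate implied by the two quoted figures.
implied_yen_per_usd = ANNUAL_COST_JPY / ANNUAL_COST_USD

# Annual cost per person under the population assumption.
cost_per_person_usd = ANNUAL_COST_USD / WORLD_POPULATION

print(f"Implied rate:    {implied_yen_per_usd:.0f} yen per dollar")
print(f"Cost per person: about ${cost_per_person_usd:.0f} per year")
```

Under that assumption, the estimate works out to roughly $125 per person per year.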

Cerebras has announced that it will use Qualcomm's AI 100 Ultra for AI inference.

'This initiative, aimed at ushering in a new era of high-performance, low-cost AI inference, couldn't come at a better time,' said Andrew Feldman, co-founder and CEO of Cerebras. 'Our customers are focused on training the highest quality, cutting-edge models without paying a fortune for inference. Using Qualcomm's AI 100 Ultra will enable us to significantly reduce inference costs without sacrificing model quality, leading to the most efficient deployments available today.'

Cerebras also announced plans to build the AI supercomputer 'Condor Galaxy 3 (CG-3)' in collaboration with G42, a leading technology holding group. CG-3 will consist of 64 CS-3 systems, each powered by the WSE-3, for a total of about 58 million AI-optimized cores and a processing speed of 8 exaflops (EFLOPS).
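
The CG-3 totals follow directly from the per-chip specifications listed earlier. A minimal sketch of the arithmetic, assuming that cores and peak performance simply add up across the 64 systems:

```python
SYSTEMS = 64                     # CS-3 systems in Condor Galaxy 3
CORES_PER_WSE3 = 900_000         # AI cores per WSE-3
PEAK_FLOPS_PER_WSE3 = 125e15     # 125 petaflops per WSE-3

# Totals under the assumption of simple linear aggregation.
total_cores = SYSTEMS * CORES_PER_WSE3
total_exaflops = SYSTEMS * PEAK_FLOPS_PER_WSE3 / 1e18

print(f"Total cores: {total_cores / 1e6:.1f} million")
print(f"Total peak:  {total_exaflops:.0f} exaflops")
```

The product is 57.6 million cores, which rounds to the 58 million quoted, and 64 x 125 petaflops gives exactly the 8 exaflops stated.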

Cerebras and G42 have previously collaborated on the development of the AI supercomputers Condor Galaxy 1 and Condor Galaxy 2, making CG-3 the third AI supercomputer in the partnership. These three AI supercomputers will enable the entire Condor Galaxy network to reach a combined processing speed of 16 EFLOPS.

The Condor Galaxy AI supercomputers developed by Cerebras and G42 have been used to train industry-leading generative AI models, including Jais-30B, Med42, Crystal-Coder-7B, and BTLM-3B-8K.

Condor Galaxy 3 is scheduled to become available in the second quarter of 2024.
