Google releases Gemini 1.5, which can handle up to 1 million tokens: up to 1 hour of video or 700,000 words of text

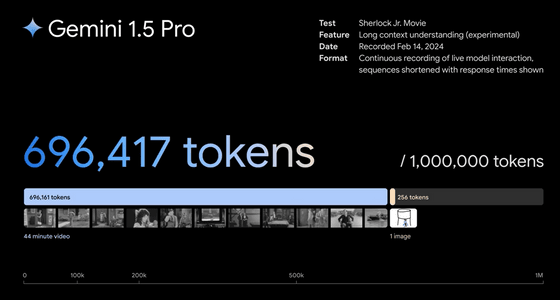

Google has announced Gemini 1.5, the next-generation version of its multimodal AI Gemini, which can process text, images, and video together. The maximum number of tokens it can process has been raised to 1 million, and Gemini 1.5 delivers higher-quality results with less computation than the previous 1.0 model.

Google Japan Blog: Next-generation model, Gemini 1.5 announced

The previous model, Gemini 1.0, was released on December 6, 2023 as a 'multimodal AI with performance exceeding GPT-4.' A hands-on video with the top-end model, Gemini 1.0 Ultra, shows it responding much as a human would.

A multimodal AI called 'Gemini' that can process text, voice, and images simultaneously and interact more naturally than humans, surpassing GPT-4, will be released - GIGAZINE

On February 15, 2024, Google announced Gemini 1.5 as the next-generation Gemini model. Alongside the announcement, the Gemini 1.5 Pro model was made available as a private preview in AI Studio and Vertex AI. 'Gemini 1.5 Pro achieves the same performance as Gemini 1.0 Ultra while using fewer computing resources,' said Sundar Pichai, CEO of Google and Alphabet. 'It shows dramatic improvements across many dimensions.'

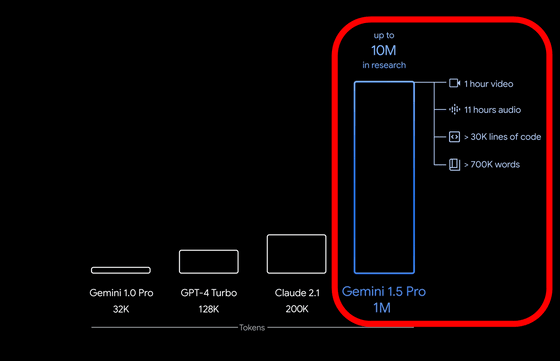

The standard context window for Gemini 1.5 Pro is 128,000 tokens, but a limited group of companies and developers selected as early testers can use a version that supports up to 1 million tokens. One million tokens is equivalent to 'one hour of video,' '11 hours of audio,' 'more than 30,000 lines of code,' or 'more than 700,000 words of text.' In research, Google has successfully tested contexts of up to 10 million tokens.
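To put those equivalences in perspective, the short sketch below divides the 1,000,000-token window by each quoted amount to get the average number of tokens per minute, line, or word that the figures imply. This is back-of-the-envelope arithmetic on Google's published numbers, not a use of Gemini's actual tokenizer; the names `EQUIVALENTS` and `implied_rate` are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope sketch: average tokens per unit implied by the quoted
# equivalences for a 1,000,000-token window. Not a tokenizer measurement;
# real token counts depend heavily on the content.

CONTEXT_TOKENS = 1_000_000

# Hypothetical table of the equivalences quoted in the announcement.
# Where Google says "more than", the figure is a lower bound on the amount,
# so the rate computed from it is an upper bound.
EQUIVALENTS = {
    "video_minutes": 60,       # "one hour of video"
    "audio_minutes": 11 * 60,  # "11 hours of audio"
    "code_lines": 30_000,      # "more than 30,000 lines of code"
    "words": 700_000,          # "more than 700,000 words of text"
}


def implied_rate(units: int, context_tokens: int = CONTEXT_TOKENS) -> float:
    """Average tokens per unit if `units` of content exactly filled the window."""
    return context_tokens / units


rates = {name: implied_rate(n) for name, n in EQUIVALENTS.items()}

for name, rate in rates.items():
    print(f"{name:>14}: ~{rate:,.1f} tokens per unit")
```

The video figure works out to roughly 16,700 tokens per minute, which is in the same range as the 44-minute film in the second demo (about 700,000 / 44, or roughly 15,900 tokens per minute).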

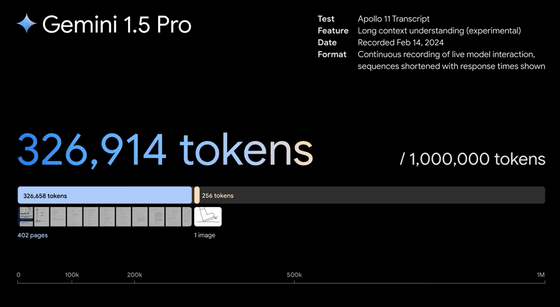

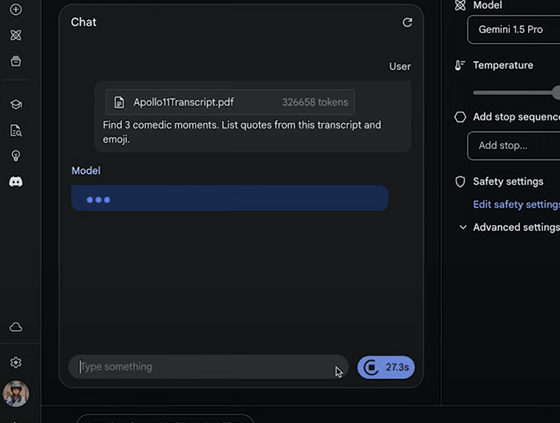

Google has uploaded several demo videos showing the capabilities of Gemini 1.5 Pro to YouTube. The first shows the model reasoning across a 402-page transcript.

Reasoning across a 402-page transcript | Gemini 1.5 Pro Demo - YouTube

The 402-page transcript comes to about 330,000 tokens.

The transcript is uploaded as a PDF file along with the prompt: 'Find three funny moments, quote them and add emojis.'

The model correctly extracted the 'playful exchange' passages.

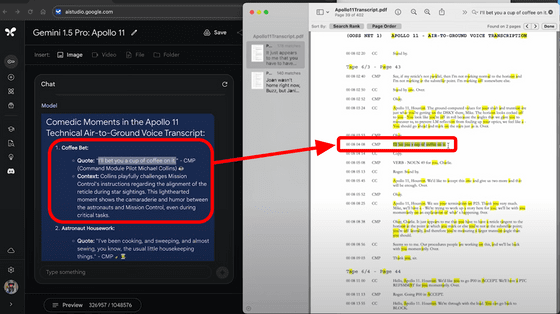

When given an image of someone taking a step and asked, 'What moment is this?', Gemini 1.5 Pro answered, 'The moment man first walked on the moon.'

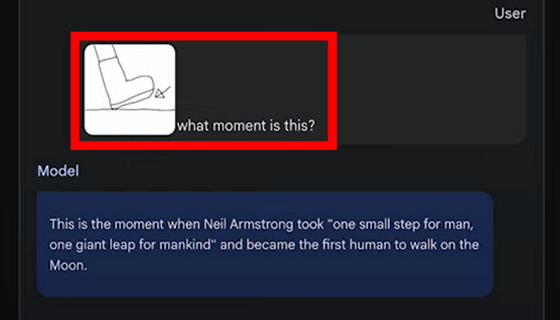

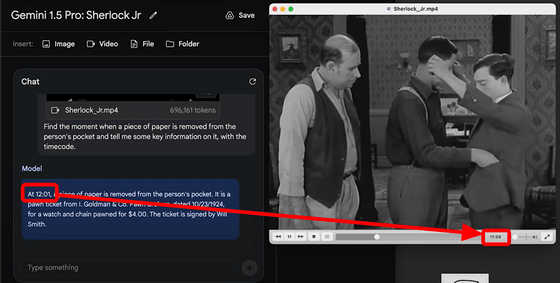

The second demo has the model analyze a 44-minute film.

The 44-minute film comes to approximately 700,000 tokens.

Gemini 1.5 Pro is given the video and asked to 'find the moment when someone takes a piece of paper out of their pocket and give you the time and context.'

Gemini 1.5 Pro not only identified exactly when the event occurred, but also reported details such as the date, the people in the scene, and what was written on the paper.

You can also supply a hand-drawn image and ask, 'What time did this happen?'

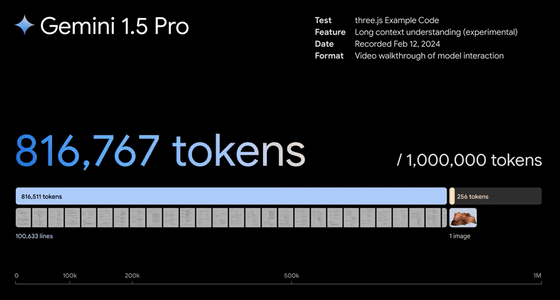

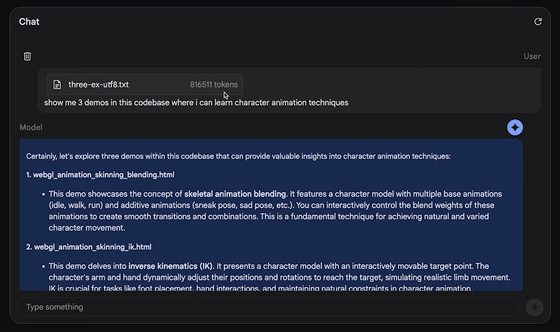

The final demo has the model read more than 100,000 lines of code.

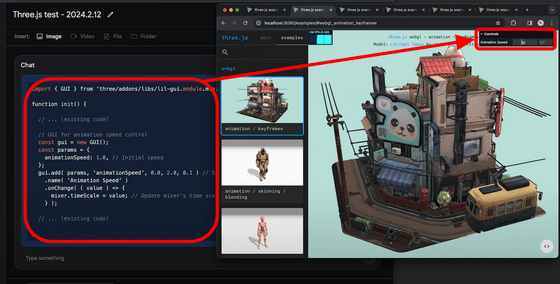

The input, the three.js example code, comes to about 820,000 tokens.
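The three demo inputs also show why the 1-million-token window matters: none of them would fit in the standard 128,000-token window. The sketch below checks each quoted token count against both advertised window sizes. The helper `smallest_window` and the `DEMOS` table are hypothetical names for illustration, and the check ignores the tokens used by the prompt and the model's response.

```python
# Hypothetical sketch: which advertised context window can hold each demo input?
# Token counts are the approximate figures quoted for the three demos.
# Prompt and response tokens are ignored, so real headroom is somewhat smaller.

STANDARD_WINDOW = 128_000    # standard Gemini 1.5 Pro window
EXTENDED_WINDOW = 1_000_000  # early-tester preview window

DEMOS = {
    "402-page transcript (PDF)": 330_000,
    "44-minute film": 700_000,
    "three.js example code": 820_000,
}


def smallest_window(tokens: int) -> str:
    """Return the smallest advertised window an input of `tokens` tokens fits in."""
    if tokens <= STANDARD_WINDOW:
        return "128K"
    if tokens <= EXTENDED_WINDOW:
        return "1M"
    return "neither"


results = {name: smallest_window(t) for name, t in DEMOS.items()}

for name, tokens in DEMOS.items():
    share = tokens / EXTENDED_WINDOW  # fraction of the 1M window used
    print(f"{name}: {tokens:,} tokens -> {results[name]} ({share:.0%} of 1M)")
```

All three inputs need the extended window, using roughly a third to over four-fifths of it.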

Asked, 'Show me three demos included in the code that can help me learn about character animation techniques,' the model points to three demos and explains each one.

You can also request code changes in plain text, such as 'add a slider to adjust the animation speed,' and the model generates the corresponding code.

Developers who want to try Gemini 1.5 Pro can join the AI Studio waitlist, while businesses are asked to contact the Vertex AI account team.

Google says that once the model is ready for a wider release, it will launch Gemini 1.5 Pro publicly with the standard 128,000-token context window, then scale the context window up to 1 million tokens as it improves the model.


in Software, Web Service, Posted by log1d_ts